I have always been a fan of electrons in airplanes. The more technology, the better, if you ask me. But I've been burned a few times and I take careful note of whenever the 'trons turn evil. When introduced to some new piece of electronic bling, I tend to be skeptical.

— James Albright

Updated:

2018-06-20

A little honest skepticism is healthy in airplanes. I worry about younger pilots who never had to live without the high tech and may not be adequately armed to realize when high tech turns bad; and even when they do, they may not have the tools to fix what is broken.

So let's take a 40-year journey to see how one pilot (me) learned to develop a healthy amount of skepticism about all things tech, but also to embrace the tech when it makes things safer. I'll throw in a few of the more notable accidents as well as some pretty nice saves. And then, please allow me to give you a few techniques on how to be a better consumer of those electrons.

I do, by the way, collect techniques. If you have a better way, or a new way to keep the electrons honest, please hit the "contact" button on the top or bottom of this page and let me know.

1

Forty years of technology

I had 25 hours in a Cessna 150 when I showed up to fly the Cessna T-37B in USAF pilot training in 1979. With each new aircraft came new technology and life got easier in several ways, but harder in others. The lessons, fortunately, are cumulative. What you learn from one airplane often transfers to the next. You just have to see the connections.

The T-37B

In the seventies and eighties, Air Force pilots began their jet training in the mighty T-37B, an airplane that was said to be designed to convert JP-4 to noise. We called it the 5,000 pound dog whistle.

When I started, the airplane's attitude indicator was a simple black sphere with a white line through the horizon, but we eventually got one with blue sky and black earth. You had to keep the airplane right-side-up looking at this instrument, while monitoring the heading on another, and the course on a simple RMI. But there was even more to it than that. Have you ever heard of a shotgun cockpit?

You make a shotgun cockpit by taking a sheet of aluminum and blasting it with a shotgun. You then put an instrument, switch, light bulb, or something else in every hole you find. Easy.

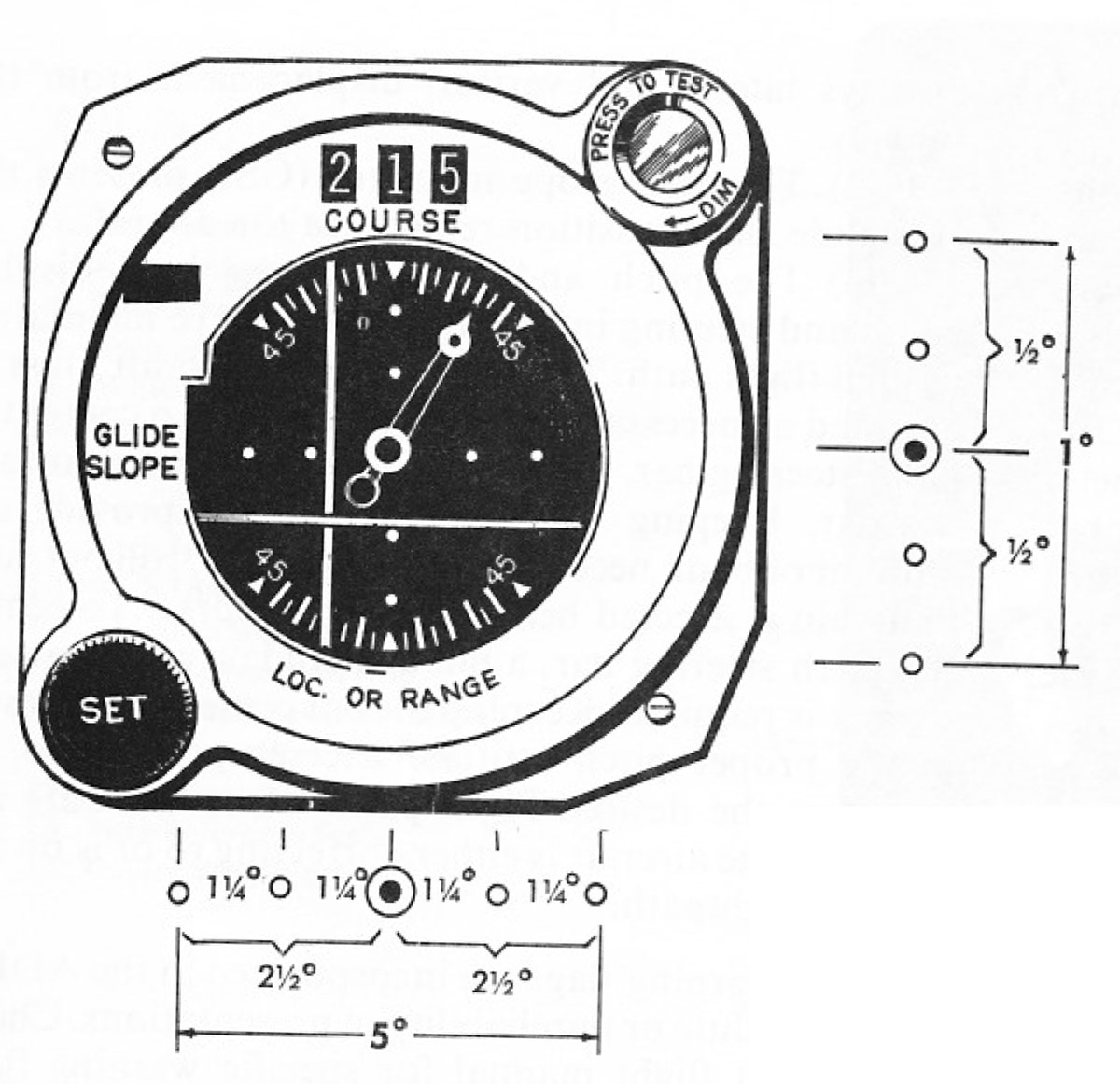

The T-37B had a course indicator; I never saw one of those before and I thought it was pretty neat, but . . .

. . . the course needle didn't always correspond to the course arrow, depending on if you were flying inbound or outbound, depending on what course you had dialed in, and depending if you were flying a front course or a back course. It sometimes seemed the instrument came with so many procedures and exceptions to those procedures that it was designed to trick an unsuspecting student into making mistakes.

If you had the course set knob dialed incorrectly, the needle could actually operate opposite the arrow. Attempting to correct to course would do the opposite. I did that once on a training sortie with a terrain chart jammed between my visor and helmet to keep me from peeking. I thought I heard the instructor laughing and figured out what was wrong before it was too late.

Lesson learned: an incorrect pilot input can corrupt an instrument's output.

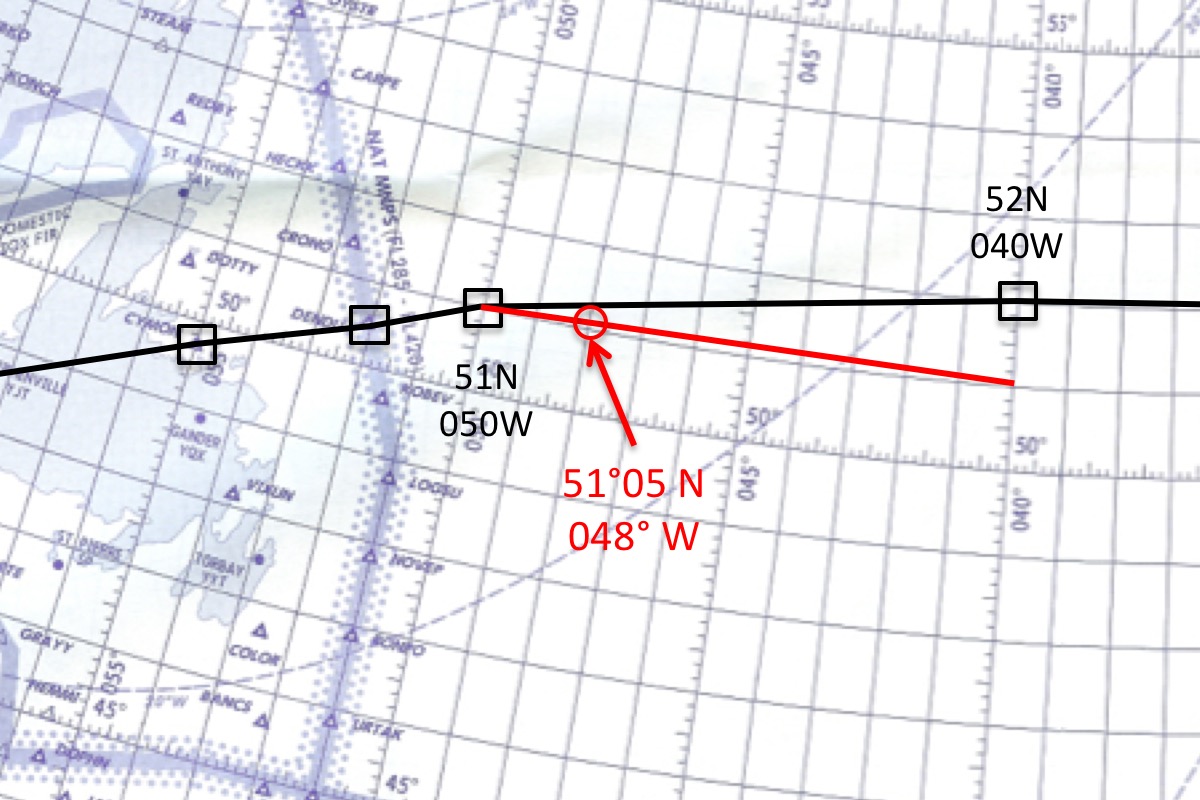

Perhaps the most common example of an input/output error is the one-degree error resulting from getting one key wrong on an FMS input. These days most of us download our oceanic flight plans and the chances of making programming errors are greatly reduced. But we often get last minute re-routes where getting one key wrong can send you off course before you know it. The FMS happily flies to the wrong waypoint and your displays all show you on course. That's because you are on course! On course to the wrong waypoint. I've done this once, about 20 years ago. Fortunately I checked my position two minutes after waypoint passage and caught the error before we were beyond the legal course width limits in place back then. Nobody knows I did this. (Except you.)

The T-38A

Once we graduated from T-37s, the Air Force thought we had become instrument pilots and immediately shifted our focus to formation and aerobatics designed to teach dog fighting techniques and other "stuff of righteousness." And if we survived all that, they threw us back into instruments.

The T-38A had a flight director; I never saw one of those before and I thought it was pretty neat, but . . .

. . . we quickly learned that the flight director wasn't smart enough to ensure a course intercept would occur before or after the desired fix and that keeping your eyes exclusively on the aircraft attitude indicator could deprive you of other information also vital to flight, such as airspeed and an altimeter heading south. I once flew the world's best ILS ever flown by a 22-year-old. It was perfect until the wings started to buffet and I learned the value of sitting on top of two afterburners.

Lesson learned: No amount of technology removes the requirement to keep up an instrument scan.

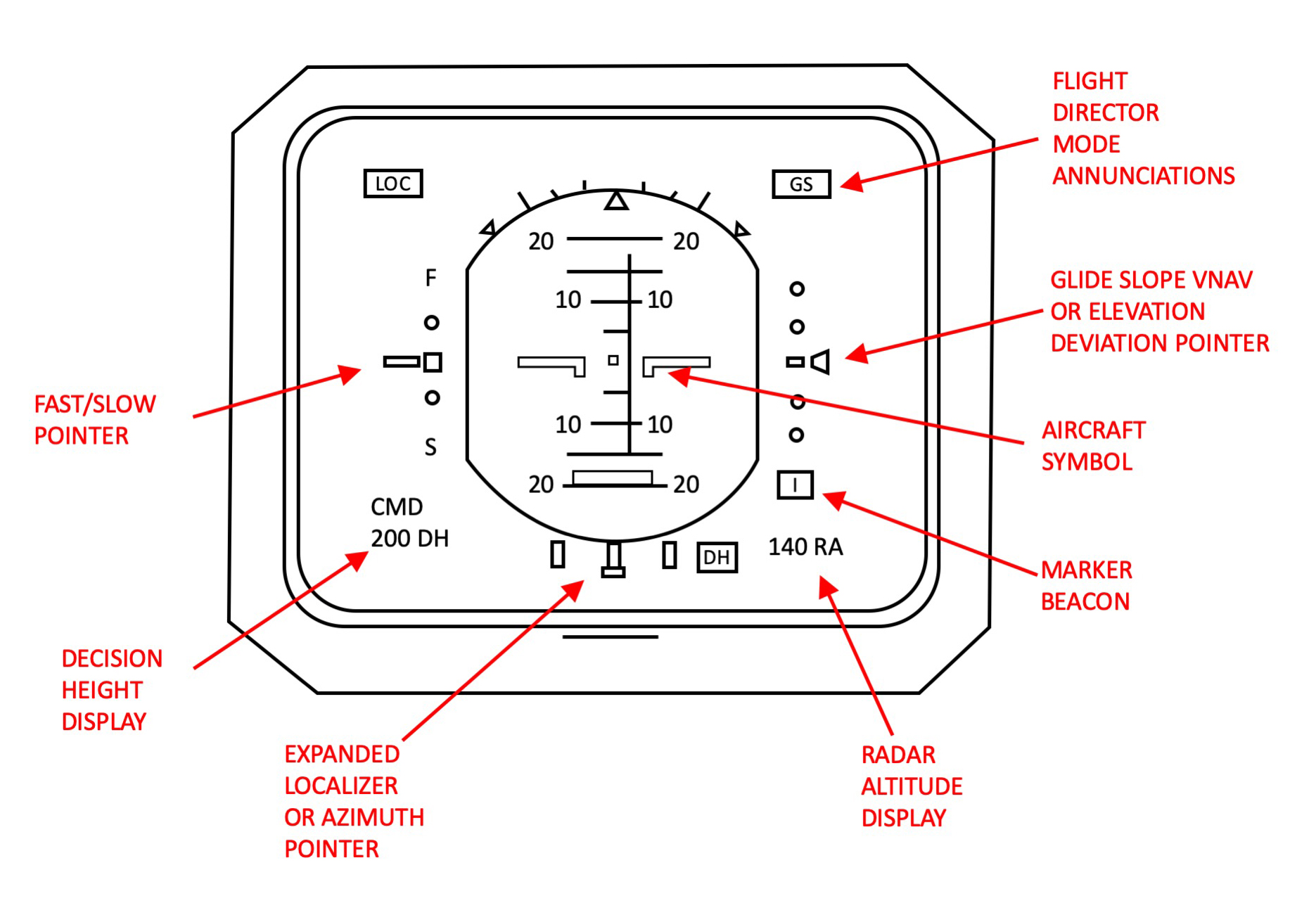

The NTSB accident docket is filled with crashes that could have been prevented with a better instrument cross check. The one that hits closest to home for me was the case of N85VT. This Gulfstream III was on final approach to Houston Hobby in 2004, flying what should have been a simple ILS. The pilots made a lot of mistakes along the way to crashing, including failing to set the ILS frequency. The pilot flew a VOR as if it were the ILS, confusing the fast/slow pointer for the glide slope pointer. (This was easy to do back then. There wasn't a lot of consistency in the layout of flight instruments and the glide slope pointer was sometimes on the left, sometimes on the right.) Part of any ILS should include a scan of available distance cues such as DME or marker beacons. Here's a good rule of thumb: if an ILS requires more than 1,000 feet per minute descent rate, you are in one of several situations: (1) you have an unsafe tailwind, (2) you are flying too fast for a safe approach, (3) you aren't flying on glide slope, or (4) you are flying an SR-71. Note that only one of those options is safe. More about this: Gulfstream III N85VT.
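The 1,000 feet per minute rule of thumb can be checked with a little trigonometry. What follows is a minimal sketch, not an approved procedure: it assumes a 3 degree glide slope (typical, but not something the rule of thumb above states), and the function names are made up for this illustration.

```python
import math

FEET_PER_NM = 6076.12  # feet in one nautical mile


def descent_rate_fpm(groundspeed_kt, glidepath_deg=3.0):
    """Descent rate (feet per minute) needed to stay on a glide path.

    Assumes a constant groundspeed and a straight glide path; the
    3 degree default is an assumption, not a property of every ILS.
    """
    feet_per_minute_over_ground = groundspeed_kt * FEET_PER_NM / 60.0
    return feet_per_minute_over_ground * math.tan(math.radians(glidepath_deg))


def groundspeed_for_rate(rate_fpm, glidepath_deg=3.0):
    """Groundspeed (knots) at which the glide path demands rate_fpm."""
    return rate_fpm * 60.0 / (FEET_PER_NM * math.tan(math.radians(glidepath_deg)))


if __name__ == "__main__":
    for gs in (120, 140, 160, 180, 200):
        print(f"{gs} kt groundspeed needs about {descent_rate_fpm(gs):.0f} fpm")
    print(f"1,000 fpm corresponds to about {groundspeed_for_rate(1000):.0f} kt")
```

Under those assumptions the required rate works out to a little over five times the groundspeed in knots, so a 1,000 feet per minute requirement implies a groundspeed approaching 190 knots, which is consistent with the "too fast or too much tailwind" reading of the rule.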

The KC-135A

After earning my wings as an Air Force pilot, I became an Air Force copilot in the KC-135A. I had gone from 12,500 pounds of airplane sitting on top of two afterburners, to a 300,000 pound airplane strapped to four very anemic water-injected turbo jets.

The KC-135A had an autopilot; I never saw one of those before and thought it was pretty neat, but . . .

. . . the autopilot was very old technology that was little more than a wing leveler with a rudimentary course follower. The first KC-135A went operational in 1955, a year before I was born. By the time I got to it, in 1980, 57 of them had crashed. Twelve of those during takeoff. There was a warning in the flight manual that misjudging the rotation by a half a degree could keep the airplane from flying at some weights. The Air Force installed a very nice flight director with a TO/GA (Takeoff / Go Around) to fix that, but they left the autopilot alone.

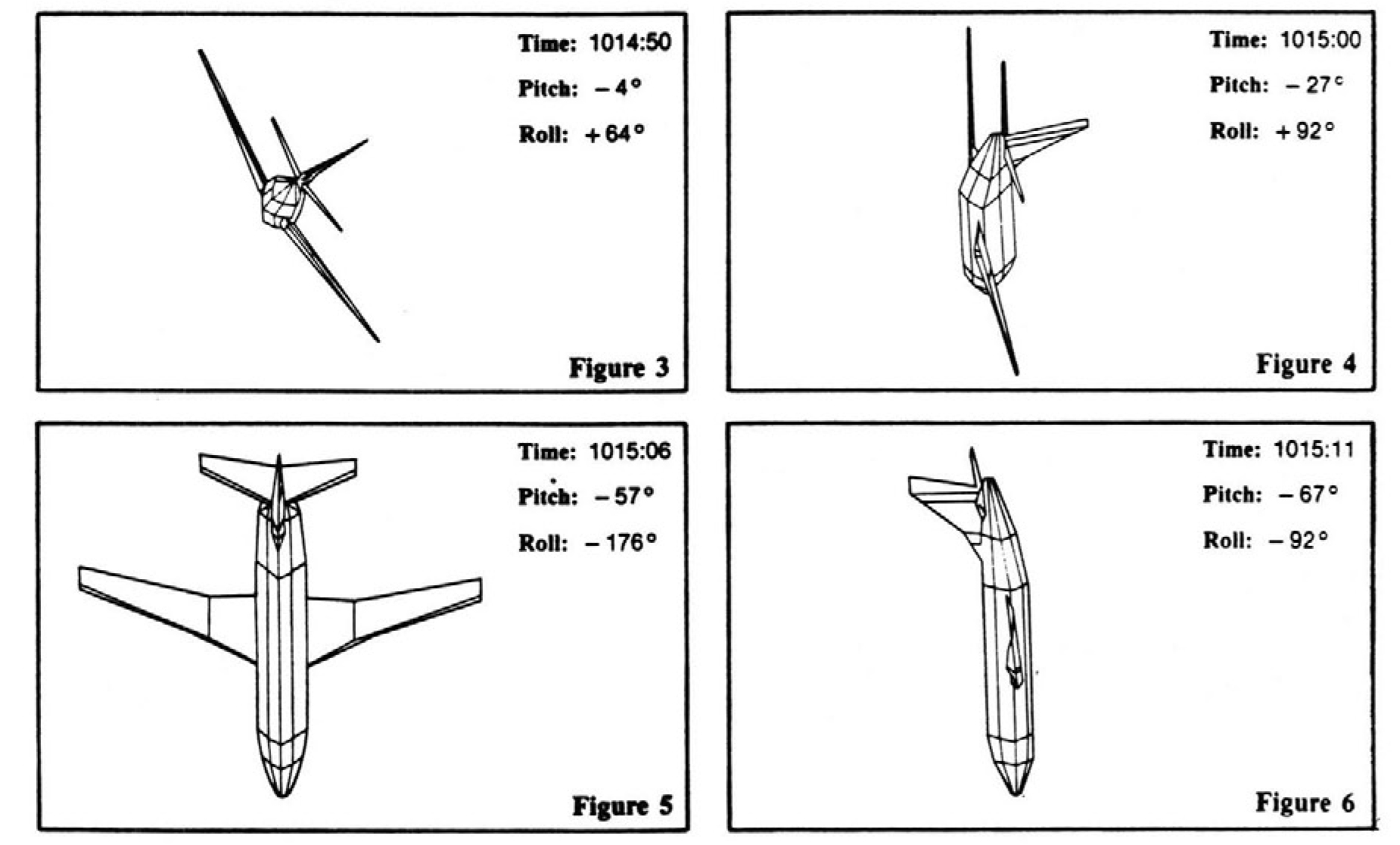

I left the airplane in 1982. Two years later the crew of a KC-135 were flying in the weather, straight and level, when the navigator requested a turn. The pilot turned the autopilot aileron control full scale to make a 30° bank turn and then returned his attention to the paperwork he had been working on. The copilot was heads down copying a message from the HF radio, one of those things they did back in the cold war. The first person in the cockpit to notice a problem was the navigator who saw the altimeter unwinding itself at a rapid pace. He simply said, "check altitude." The pilot looked forward to see his attitude indicator's horizon line was all brown except for a thin blue line at an angle to one side. He didn't realize that this attitude indicator was good for 360° of pitch and roll, something unusual for the time, and that the thin blue line would always point to the sky. So instead of rolling to the sky, he pulled back and executed what amounted to a Split-S. They popped out of the weather just under 10,000 feet. No one on the airplane was hurt but the airplane was heavily damaged.

Lesson learned: Know what the automation should do for any normal or abnormal situation, and take over if it doesn't perform as expected.

A year before I was to fly the Boeing 747, China Airlines almost destroyed one with 274 people on board. En route to Los Angeles, a turbulence-induced airspeed excursion caused the autothrottles to bring all four engines to a lower setting and then to a high setting. The #4 engine "hung" near idle as numbers 1, 2, and 3 accelerated normally. The flight engineer attempted a relight at altitude, FL 410, but the correct procedure would have been to descend to FL 300 or below.

As engines #1, #2, and #3 went to full power and the #4 to sub idle, the aircraft banked to the right, as would be expected. The captain did not add corrective rudder and instead kept the autopilot engaged and focused on the decaying airspeed. They requested and were cleared to a lower altitude, which the pilot selected on the autopilot, keeping it in control with no rudder input. When he finally disengaged the autopilot, still with no rudder input, the aircraft snapped right and steepened its dive into the clouds.

The aircraft lost over 10,000 feet before they departed controlled flight and then another 10,000 feet with the pilot pulling as much as 5 Gs in an attempt to recover. He was not able to recover until they popped below the clouds at 10,000 feet. Nobody was seriously injured except the airplane, which was eventually repaired. The captain was hailed as a hero until the flight data recorder revealed that he was anything but.

More about this: China Airlines 006.

The Boeing 747

I went from the KC-135A to the EC-135J, a Boeing 707, where the cockpit avionics were marginally better. After five years of that I got the call to fly this beast . . .

In 1986, the Boeing 747 was quite the machine and it had triple INS. I never saw such a thing before and I thought it was pretty neat, but . . .

. . . each INS could only hold nine waypoints at a time, and each waypoint had to be entered using a ten-button keypad. It was common practice to enter a replacement waypoint as soon as the old waypoint was history. If you passed waypoint six, for example, the FROM/TO window would display "78" as shown here. One day I was flying from Anchorage, Alaska, to Tokyo, Japan when the navigator typed in one of these waypoints but got the "W" and "E" mixed up in the longitude. He was used to typing "W" because that's where most of our flying was. But he made the mistake and I didn't catch it. He was a professional, after all. I didn't want to hurt his feelings, after all. He never made that mistake before, after all. Two hours after his mistake, while flying abeam the Kamchatka Peninsula, the airplane made an abrupt turn to the right, toward Russian airspace. You history buffs know that we weren't the best of friends with the Russians in 1988 and five years before this the Russians shot down a Boeing 747 airliner over the exact same airspace. I clicked off the autopilot and got back to the oceanic track before anyone noticed. I hope.

Lesson learned: Critical data entry should be entered by one cockpit crewmember and checked by another.
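One way a second crewmember (or a second look) can catch a keyed-in error is a gross-error check: compute the distance from the previous waypoint to the one just entered and compare it with the leg distance printed on the flight plan. The sketch below is only an illustration of that idea. The coordinates are invented, the two-mile tolerance is an arbitrary assumption, and the function names are hypothetical; it uses the standard haversine formula on a spherical Earth.

```python
import math

EARTH_RADIUS_NM = 3440.065  # mean Earth radius in nautical miles (spherical model)


def great_circle_nm(lat1, lon1, lat2, lon2):
    """Great-circle distance in nautical miles via the haversine formula.

    Latitudes and longitudes are in decimal degrees; north and east are
    positive, south and west are negative.
    """
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlam = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlam / 2) ** 2
    return 2 * EARTH_RADIUS_NM * math.asin(math.sqrt(a))


def leg_is_plausible(prev_wpt, entered_wpt, planned_leg_nm, tolerance_nm=2.0):
    """True if the entered waypoint gives roughly the planned leg distance."""
    actual = great_circle_nm(*prev_wpt, *entered_wpt)
    return abs(actual - planned_leg_nm) <= tolerance_nm


if __name__ == "__main__":
    # Invented example waypoints, not from any real flight plan.
    previous = (55.0, 170.0)    # 55N 170E
    intended = (53.0, 165.0)    # 53N 165E
    mistyped = (53.0, -165.0)   # 53N 165W: the "W" for "E" slip
    planned = great_circle_nm(*previous, *intended)

    print("intended entry plausible:", leg_is_plausible(previous, intended, planned))
    print("mistyped entry plausible:", leg_is_plausible(previous, mistyped, planned))
```

The same check also flags a one-degree latitude slip, since one degree of latitude is about 60 nautical miles. It is a cross-check on the entry, not a replacement for having a second person verify it.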

The Gulfstream III

A few years later I moved over to an Air Force Gulfstream III.

In 1992 these Gulfstream IIIs were pretty new and had laser ring gyro INS. I never saw one of these before and I thought it was pretty neat, but . . .

. . . the airplane still had the simple nine waypoint system that didn't understand what an airway was and we didn't have a navigator or flight engineer to reduce the cockpit workload. We quite often flight planned as best we could, hoping we didn't miss anything.

In 1993 I was flying deep inside Russia on a route I had picked out on a jet chart and that had been blessed by the Department of Defense, was Russian air traffic control approved, and that my laser ring gyros were happily navigating to. I had on board five U.S. senators hoping to broker a peace treaty between parts of the disintegrating Soviet Union. We were precisely on course. All of a sudden the guard channel was filled with yelling and screaming. The only thing said in English was our call sign. I studied the name of each waypoint along our course and recognized the one they were screaming about. I realized I had also seen it in a Tom Clancy novel I had just read. Circumnavigating that point calmed everyone down. After we landed I found out that point was one of only two active Anti-Ballistic Missile sites in existence.

Lesson learned: Just because the needles are happy doesn't mean you can relax.

The Challenger 604

My first civilian job was with Compaq Computer flying this aircraft and one other just like it.

In the year 2000 the Challenger 604 was fairly new and came with two or three Flight Management Systems. I never flew with an FMS before and I thought it was pretty neat. But . . .

. . . I noticed many of the pilots in our flight department had forgotten basic navigation skills and were, in their own words, FMS cripples. They couldn't fly without at least one of the FMSs. I vowed I would never be like that. I soon found myself as the flight department's international procedures instructor and flew to Europe with a retired Air Force F-16 pilot. He had never crossed the Atlantic except strapped to his fighter's ejection seat, wearing an anti-exposure suit, breathing oxygen, and on the wing of a tanker. He was happy as a clam in the left seat going east. But on the return trip he was pretty nervous about the fuel. I did my best to make him feel comfortable, even after we found out the weather at Gander was at minimums. I told him it wasn't a problem since Stephenville was always up when Gander was down, and vice versa.

As our flight progressed, it was becoming obvious that Stephenville's weather was getting worse while Gander wasn't improving. We had a strong headwind and our gas situation was getting worse too. Right around 30 west, we still had 1100 nm to go and the box was predicting we would land with 4,500 lbs. of fuel. That wasn't bad on a good day, but the weather wasn't good. Gander Oceanic called minutes later announcing both our destination and alternate were below minimums and asked us what we wanted to do. They helpfully told us Halifax was clear and a million. "Let's go to Halifax." I showed the younger pilot how to reprogram the FMS for a new destination. You simply type in the new airport destination over the old, and replace any applicable waypoints. "Easy!" The FMS turned us left slightly and told us we would be landing with about 5,000 lbs of fuel, much better. I knew Halifax was another 400 nm, but putting the wind off the wing helped a lot. We passed along our new ETA to Gander Oceanic and everything was good again, for about ten minutes. The SELCAL went off and Gander asked us to recompute our ETA. The copilot read back the ETA according to the FMS again. Five minutes later they called again and asked us to figure it out again. The copilot looked at me and said, "Do they think I'm lying? This is what all three FMSs are saying."

I did what I usually do when the FMS confuses me: I stared at it for a minute or so. The numbers seemed about right. Then I took the distance remaining to Halifax, according to the FMS, and divided that by our current ground speed. We had 1,300 nm to go and our ground speed was 420, so the math comes to 3 hours and 6 minutes. The FMS predicted 2 hours and 46 minutes, 20 minutes less. We passed along the longer ETA and Gander Oceanic was happy again. I spent the next hour looking at the FMS. I finally figured it out. On at least that FMS (and probably others), if you enter individual leg winds for a flight and then change the destination, all the winds for the remaining legs go to zero. The FMS threw out a 50 knot headwind, fooling us into thinking we would get there sooner and burn less gas.

Lesson learned: Critical decisions should not be left to the electrons without a reasonableness check.

So let's look at this. Even if you are math-phobic, this is easy math you can do. Besides, knowing how to compute an ETA from groundspeed and distance remaining is a critical skill. The first problem is remembering the formula. You know how speed works in a car, right? It's miles per hour, so you can always figure out the formula on your own . . .

Speed = Miles / Hour

Of course we are concerned with nautical miles per hour (knots), so:

Knots = Nautical Miles / Hour

A mathematician will tell you that you can multiply both sides of the equation by the same thing and not change the equality. Or you can just remember you can swap the "Knots" and the "Hour" and the formula is still true:

Hours = Nautical Miles / Knots

So, in our example, 1300 nautical miles divided by a ground speed of 420 knots comes to 3.1 hours. A tenth of an hour (60 / 10) is six minutes so our ETA is 3 hours and 6 minutes.
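For anyone who would rather see the reasonableness check written out, here is a minimal sketch of the same arithmetic. The function names and the five-minute tolerance are assumptions made for this illustration; the 1,300 nm, 420 knot, and 2 hour 46 minute figures come from the story above.

```python
def time_remaining_hours(distance_nm, groundspeed_kt):
    """Hours to go: nautical miles divided by knots."""
    if groundspeed_kt <= 0:
        raise ValueError("groundspeed must be positive")
    return distance_nm / groundspeed_kt


def to_hours_minutes(hours):
    """Convert decimal hours to (whole hours, minutes), rounded to the minute."""
    total_minutes = round(hours * 60)
    return divmod(total_minutes, 60)


def fms_time_is_reasonable(fms_minutes, distance_nm, groundspeed_kt, tolerance_min=5):
    """Compare the box's time remaining with the pencil-and-paper answer.

    tolerance_min is an arbitrary assumption; pick whatever difference
    you would want explained before trusting the FMS.
    """
    manual_minutes = time_remaining_hours(distance_nm, groundspeed_kt) * 60
    return abs(manual_minutes - fms_minutes) <= tolerance_min


if __name__ == "__main__":
    hours, minutes = to_hours_minutes(time_remaining_hours(1300, 420))
    print(f"Manual estimate: {hours} hours and {minutes} minutes")

    fms_minutes = 2 * 60 + 46  # what the FMS was predicting
    print("FMS agrees with manual estimate:",
          fms_time_is_reasonable(fms_minutes, 1300, 420))
```

The point is not the code but the habit: one independent number, computed from distance and groundspeed, is enough to show that the box and reality disagree by about 20 minutes.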

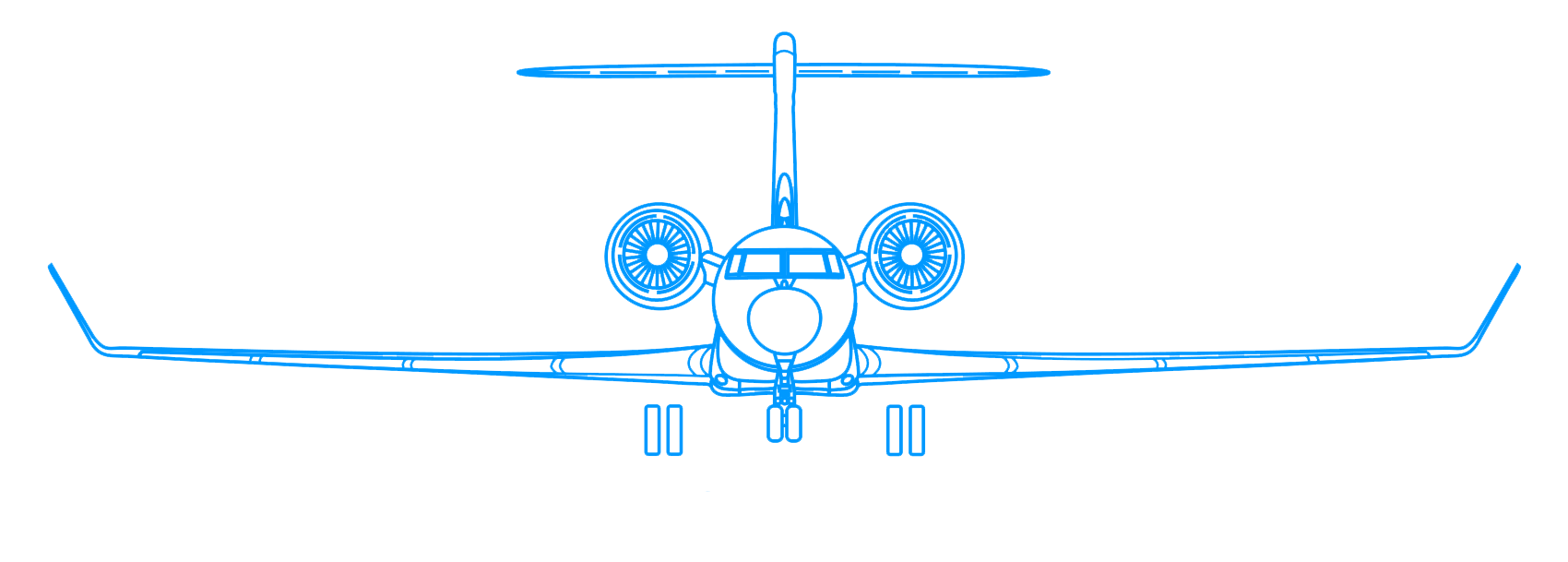

Gulfstream V

After two Challenger 604 flight departments I found my way back to the Gulfstream, this time in the GV.

The GV has one of the best looking wings in aviation and that gave it a tremendous slow speed capability. On top of every GII, GIII, GIV, and GV wing are a set of ground spoilers that are designed to pop up automatically on landing, once both the airplane's main landing gear are firmly on the ground. The weight on wheels switches from both mains had to agree the airplane was on the ground for the spoilers to come up. (Having the ground spoilers come up during flight would give the airplane the glide properties of a Toyota Tundra.) This was a prospect that filled most older Gulfstream pilots with dread. But the GV also included a number of fancy electronics that kept us from hurting ourselves. The GV has a "Fault Warning Computer." I never had one of those before and I thought it was pretty neat, except . . .

. . . some pilots didn't understand that the Fault Warning Computer could warn you about a problem in the ground spoiler safety system, but it couldn't prevent you from doing something stupid. In 2002, a crew departed the Gulfstream service center at West Palm Beach International Airport. A mechanic had disabled the main gear weight on wheel switches with wooden tongue depressors for a check he was doing, but forgot to remove the sticks. Neither pilot caught the sticks during their exterior inspection and neither pilot ran the required checklist for the situation. Then they chopped the power at 57 feet for the landing — a lousy technique in a GV — and those spoilers came up. The GV turned into a Toyota Tundra and hit so hard the struts penetrated the wing. Nobody was hurt but the airplane has never flown again.

More about this: Gulfstream V N777TY.

You can imagine crashing a 40 million dollar aircraft doesn't do your career much good. One of the pilots ended up as a simulator instructor where I attended a recurrent. Some of their instructors were still teaching that the Fault Warning Computer would prevent such a thing from happening. Some guys just never get the word.

Lesson learned: Even the most sophisticated electronic warning system lacks the processing power of the human pilot; there are times when over reliance on the former can make the latter impotent.

Gulfstream G450

After the GV I ended up flying a GIV for a year as a lead-in to a G450.

The G450 has a Forward Looking Infrared camera and a HUD. I had never flown with those before and I thought it was pretty neat, but . . .

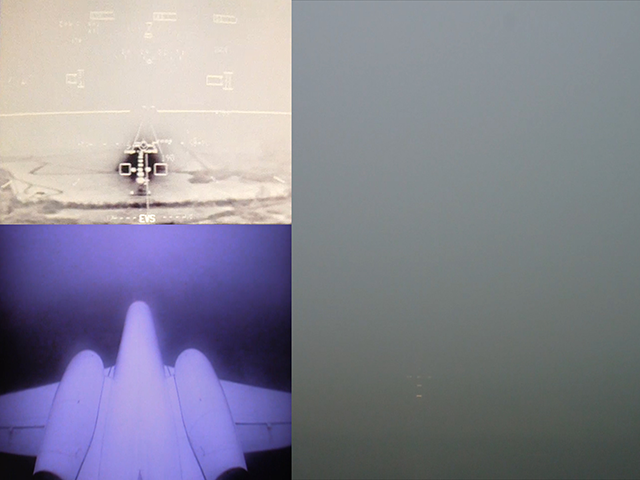

. . . the FLIR was claimed to be the magic tool to get you down to 100 feet even better than a Cat II ILS. You can see through clouds, after all. But in reality, this generation of FLIR requires a very warm heat source on the ground and very little water content in the air. This trio of photos was taken on a 15 minute test flight in November of 2013 where the ground was still warm and the fog had just moved in. I was so surprised the FLIR did what it was supposed to that I said to the other pilot at the time, "Believe it or not, I've got the EVS lights!"

Lesson learned: Just because the book says the tech will do it, doesn't mean it will always do it, that it will do it every time, or at all.

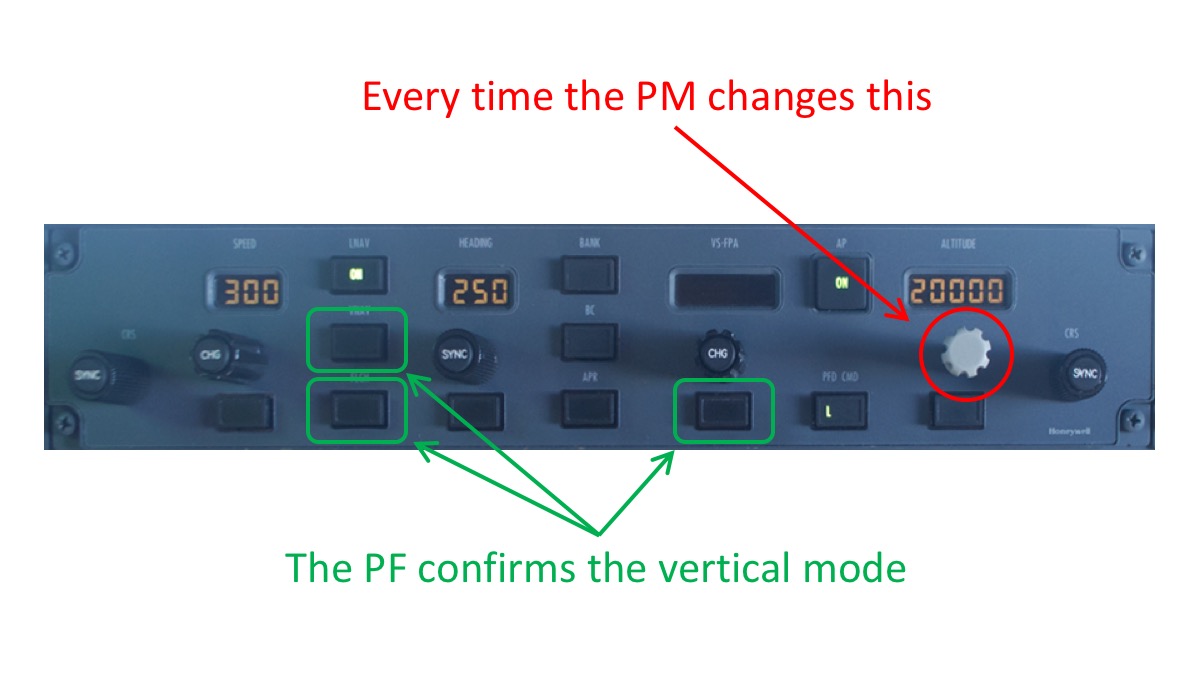

We quite often see things in our computer-driven airplanes that only occur randomly and sometimes only once. But most of the time there is an explanation and it takes us a while to track it down. When we had less than six months with the new bird it flew right through the altitude in the altitude select window. Center gave us a stern warning and we felt embarrassed at the time. Embarrassed isn't strong enough a word. I spent the next few months trying to figure it out. It only happened after we got recleared to a new altitude before completing the climb or descent. But it didn't always happen. But because we had become so paranoid we did figure it out. The autopilot on this airplane might capture an altitude several hundred or even a thousand feet before reaching the selected altitude. At that point it would go into a pitch hold mode. If you dialed in a new altitude without selecting another vertical mode, the autopilot would be happy to hold that pitch until you ran out of airspeed going up or sky going down.

I call this the "vertical mode trap" and several Gulfstream company pilots admitted to me it is a problem in the G450. But they also tell me it has been fixed in the G500 and G600. That makes me wonder about the G550 and G650.

2

FOQA: the ultimate "big brother"

The Gulfstream G450 also included my introduction to a Flight Operations Quality Assurance (FOQA) system. I canvassed my fellow pilots for hints and techniques for making the best of it. I was surprised how some pilots reacted to their FOQA, and even more surprised at my own learning curve to accept what the magical box was telling me.

After having flown with it for five years now, I am a believer. FOQA will make you a better pilot. Here is an example from our first FOQA report.

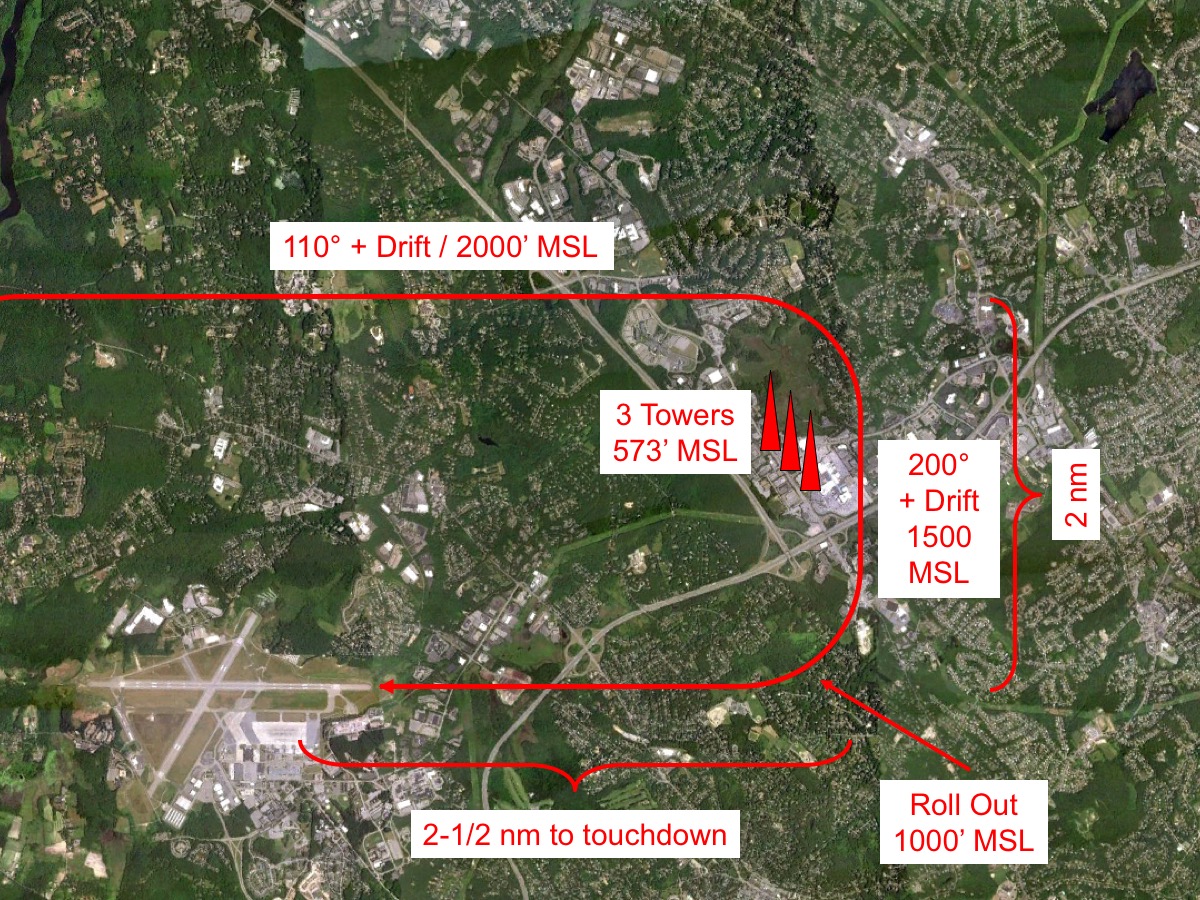

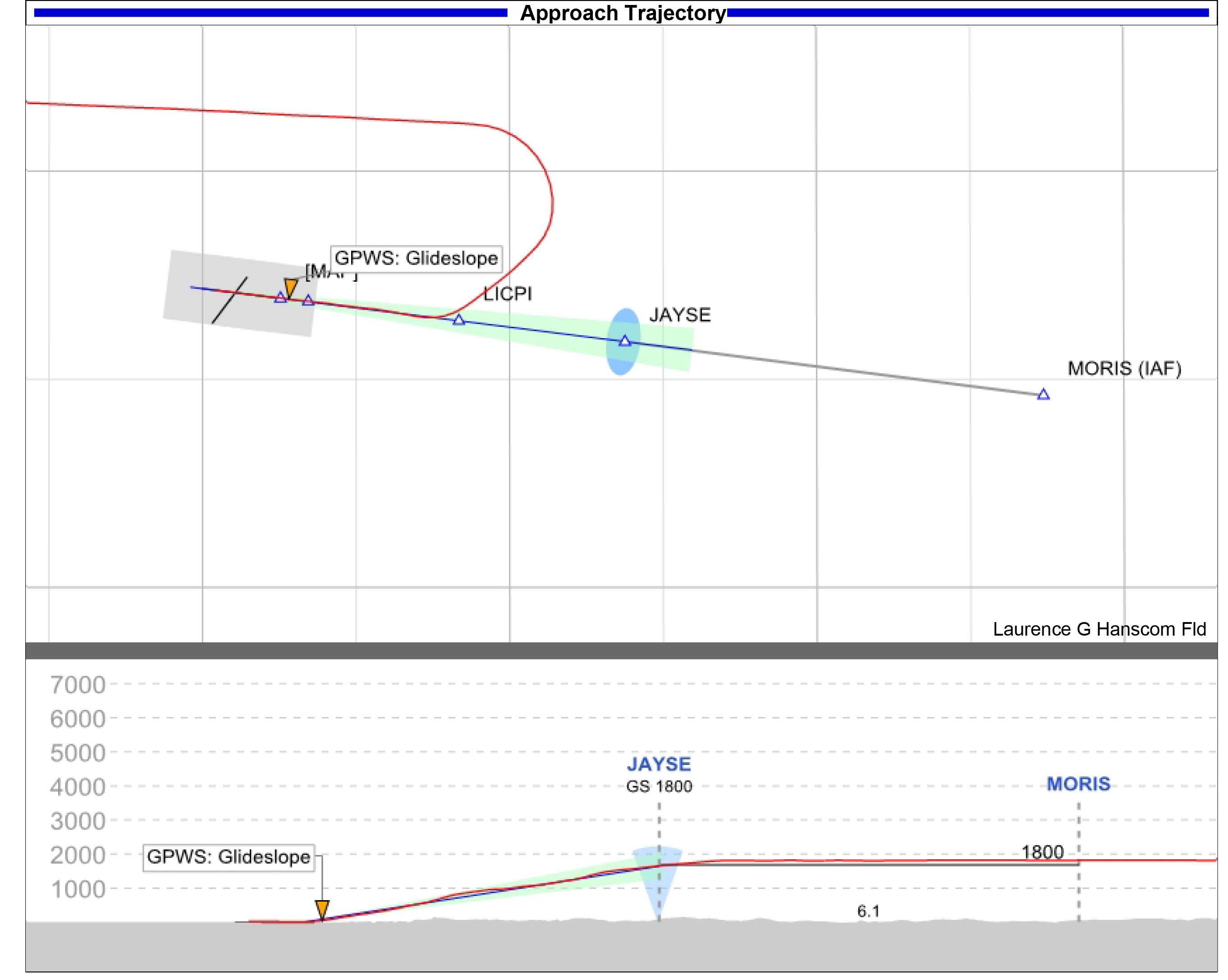

If you've ever flown a visual approach to Runway 29 at Hanscom Field, Bedford, Massachusetts (KBED) you will have seen the three towers abeam a 2-1/2 nm final, just to the north. I had always flown my base just inside the towers, allowing me to roll out at 2 miles and about 600 feet for a nice, stable final approach. Or so I thought.

Everything had to be just right to make a roll out at 2 miles and 600 feet, and things rarely work out "just right." I heard from another Gulfstream operator that the FOQA didn't like you joining the ILS below the glide path so your choices were to ensure the ILS wasn't tuned or to ignore the FOQA report. So that was my plan until I got our first report.

We were actually joining the glide slope at 400 feet and sometimes as low as 300 feet. The effort often ended up in a very sloppy looking pattern. We worked this through our SMS program and came up with the conclusion that flying outside the towers only added a half mile but made the entire pattern much safer. We no longer have a problem getting a stable approach on a visual to Runway 29 at Bedford.

The experience taught us to be more open to looking at what we have always assumed was the safest way to do things, especially when FOQA begs to differ. Besides helping us with more stable approaches, it has made us better at always landing in the touchdown zone. In short it has made us better pilots. With very few exceptions, if you look at a FOQA squawk honestly you will probably find that you need to rethink what you are doing.

I've heard recently about a flight department that was having overspeed issues with their flaps during takeoff, probably because the Falcon 2000LX is such an overpowered beast. The person telling me the story says FOQA cited them for overspeeding the flaps 16 times in a reporting period. Once they became aware, they used their safety management system to investigate fixes. The cure involved aircraft service bulletins, upgrades to their checklists, and additions to their required recurrent syllabus items. As a result, they were able to reduce the number of overspeeds to just 3 in the next reporting period.

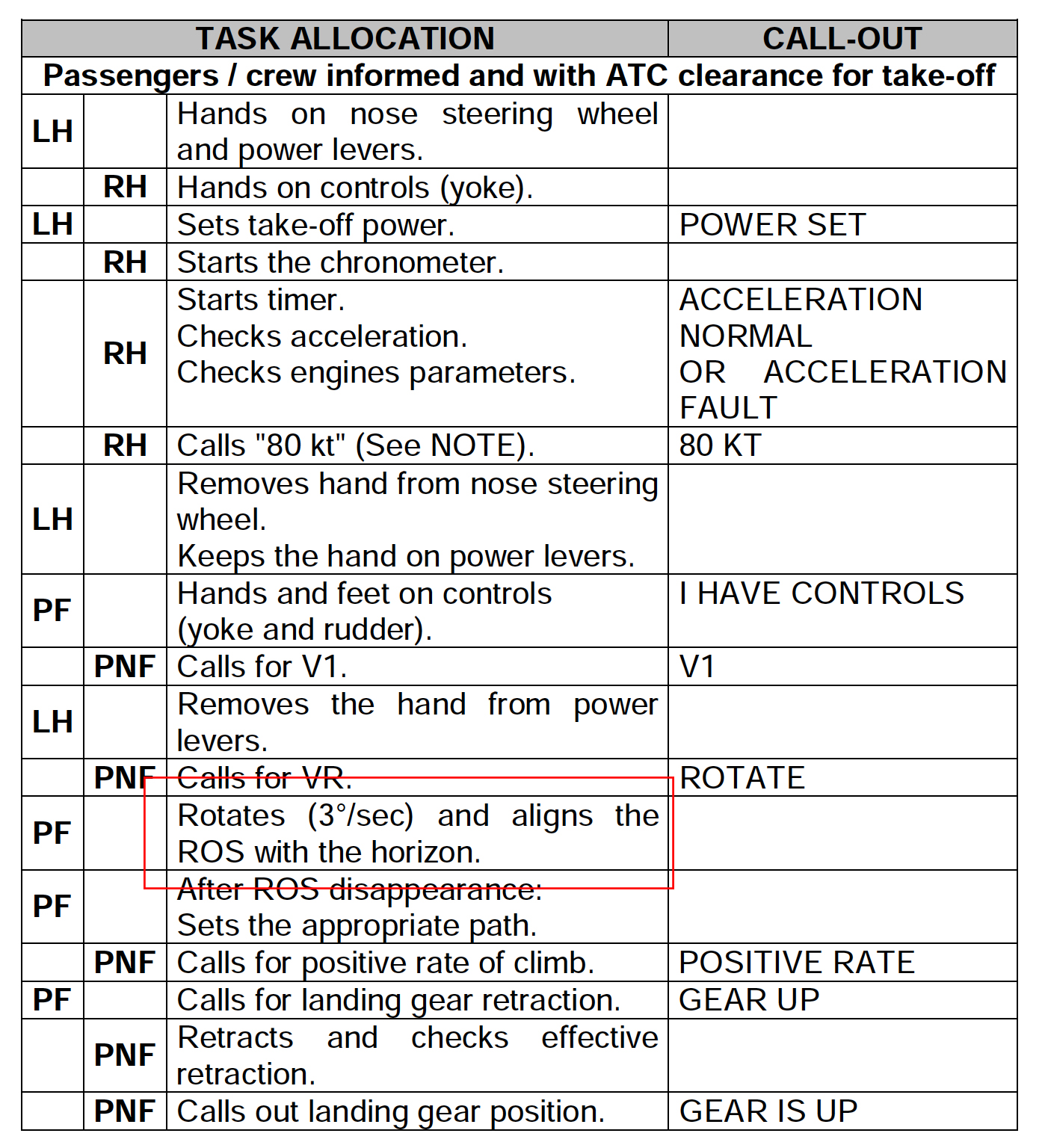

So this was a case of FOQA bringing to light the need for several changes in the aircraft and procedure. I've never flown a Falcon, except in the jumpseat. But a common technique of many pilots in the business of flying passengers is to rotate slowly and to an angle that is less than recommended by the manufacturer. When I was at the 89th Airlift Wing at Andrews I think half our pilots were of this mindset. My attitude is a bit different. I think there are things our passengers will have to accept when defying gravity and one of those is that during takeoff you will be accelerating upward as well as forward. If your manual gives you a rotation rate and angle to beat obstacles, you should be well schooled at doing it exactly that way. As it turns out the Falcon 2000 does have a specified rotation rate and angle.

3

Automation resource management

I used to preach paranoia when it came to cockpit technology, thinking that if you always suspected the 'trons were trying to kill you, you would be better able to defend yourself. But that was before the days of flying airplanes that simply cannot fly without them. I've now adopted the philosophy of treating cockpit technology the same as you would a brand new member of your crew.

With each new generation of aircraft we are treated to levels of automation unparalleled in performance and reliability. For those of us who first flew with autopilots capable of little more than holding a heading or an altitude, a system that can fly a radius-to-fix approach to a 0.1-nm Required Navigation Performance (RNP) is truly a step into a new universe.

But in all that time we’ve not progressed beyond thinking about the electrons-to-neurons interface as just another chapter in cockpit appliances. It is time to consider cockpit automation as another pilot, one capable of routine brilliance but occasional catastrophe. That electronic pilot needs the same crosscheck as its flesh and blood counterparts. Crew Resource Management (CRM) can provide lessons on managing our digital colleague.

On many modern aircraft, the FMS, the flight director, and the autopilot have become integral parts of the aircraft and you simply cannot fly without them. But they tend to be so reliable that when they make a mistake, you may not even know it, you may be so surprised you freeze up in confusion, or you might find yourself not knowing how to fix what the electrons have broken.

- As with the case of the one-degree oceanic waypoint error, looking at a cockpit display that is drawn by the FMS is not a valid check of the FMS. One is simply using the same data as the other. If the aircraft symbol in your attitude indicator is neatly tucked away inside your flight director command bars, you might have a problem if those command bars are driving you into a mountain. Unless you avail yourself of other forms of input, you cannot evaluate the output of your technology.

- The easiest technology error to detect is one that happens suddenly, such as several displays going blank at the same time. The symptoms of the problem are obvious, but the sudden shock can leave you frozen. Human Factors engineers call this the "startle effect." These situations may call for immediate pilot action, but unless the pilot is ready for it, the action may not be timely enough.

- Some of these electrons are smarter than we can ever be, can fly with a precision we can never match, and can do all these things for hours on end. Until they don't. But if you are flying between the hills in Gastineau Channel on an IFR approach to Juneau International Airport, Alaska, with the RNAV (RNP) approach navigating you to within plus or minus 0.15 nm (912 feet), how can you possibly react to a failure other than by smashing the go-around button and climbing as quickly as possible?

The Flight Safety Foundation's "Golden Rules" for automation, published in 2009, offer some sound guidance, but perhaps need an update:

- Automated aircraft can be flown like any other aircraft.

- Aviate, navigate, communicate, and manage — in that order.

- Implement task-sharing and backup.

- When things do not go as expected, take control.

I am sure the point they are trying to make with the first rule is that when all else fails, you can keep the airplane out of the dirt if you use your basic "stick and rudder" skills to keep the airplane right side up. But it should not be taken to mean you can do anything the airplane can do. You cannot, for example, hand-fly the airplane in RVSM airspace. You cannot hand-fly to minimums on some types of instrument approaches. But more importantly, there are situations where you can legally do something, but probably shouldn't.

Once again, the second rule remains a good philosophy for AFTER things have gone horribly wrong. As the situation evolves, you are better off dividing your attention as needed. If the airplane is doing a good job of aviating and navigating, perhaps devoting a little more time to managing the technology will prevent a minor problem from escalating into one where aviating or navigating are in doubt.

Remember that the autopilot is another member of your crew (more on this to come). Sometimes the autopilot is the best pilot on the flight deck to "do" while the human pilots devote time to monitoring, planning, and thinking ahead.

The stereotypical "click, click" should not be your first reaction to a problem. The "click, click" may actually be your worst option. (More about this below.)

Level reversion / stepping back, automation shedding

The modern response to handling automation problems is to dial back the level of automation to gradually return duties from electrons to neurons — from avionics to humans — to ease the sudden cognitive burden placed on the pilot. This approach has been variously called “level reversion,” “stepping back” or “automation shedding.” The key point is you turn off the most complex items first, continuing further until the problem is resolved.

The Flight Safety Foundation’s (FSF) Approach and Landing Accident Reduction (ALAR) Tool Kit Briefing Note 1.2 offers the following example any time the aircraft does not follow the desired flight path and/or airspeed:

- Revert from FMS to selected modes;

- Disengage the autopilot and follow flight director guidance;

- Disengage the flight director, select the flight path vector (as available) and fly raw data or fly visually (in VMC); and/or,

- Disengage the autothrottles and control the thrust manually.

In principle this approach seems to be just what is needed, and yet we continue to see examples of pilots being unable to quickly resolve situations by incrementally lowering automation levels. In fact, when overwhelmed, pilots may be inclined to revert their levels all the way. The FSF’s “Golden Rules” encourage a measured approach to level reversion, and conclude with “when things do not go as expected, take control.”

This reaction is certainly appropriate if the automation is about to lose control of the airplane or fly it into a mountain. But what if the severity of the situation isn’t clear-cut and the automation failure isn’t black and white? Then how can pilots detect the problem, devise a solution, and get the airplane back to where it needs to be? In some automation accidents, shedding the highest level of automation wasn’t the right answer.

Case Study: Turkish Airlines Flight TK-1951

Side view, Turkish TK-1951, from Dutch Safety Board Report, Illustration 5.

On February 25, 2009, Turkish Airlines Flight TK-1951 crashed short of Runway 18R at Amsterdam-Schiphol International Airport (EHAM), Netherlands. The Dutch Safety Board concluded that the accident “was the result of a convergence of circumstances” that included a malfunctioning radio altimeter. We might expect a radio altimeter to play a pivotal role during a Category III ILS where it would guide the airplane to the runway during its last few feet in near zero-zero fog. But the weather, 1,000 to 2,500 feet overcast ceilings with a visibility of 4,500 meters, was not a factor for Turkish TK-1951. So how could the radio altimeter have caused this crash?

The airplane was being flown from the right seat by a first officer receiving route training from a highly qualified captain. A second first officer sat in the jump seat as a safety observer. This Boeing had two autopilots, two radio altimeters, and a single autothrottle system. It was being flown using the right autopilot, as might be expected with the airplane being controlled from the right seat. The autopilots cannot be engaged simultaneously unless an ILS frequency is tuned and the approach mode is selected. The autothrottles are usable from takeoff to landing and automatically retard once the airplane is over the runway below 27 feet. There are a variety of Boeing 737 autothrottle systems, most of which marry the autothrottle to the same-side radio altimeter as the controlling autopilot. But in the accident aircraft, the autothrottle was linked to the left radio altimeter unless it was not working. These connection variables were not documented in any manuals available to the crew.

The arriving flight was vectored in for a “short line up,” bringing them onto final approach three miles inside a normal intercept point. This would get them to the runway sooner but would require they join the glide slope from above.

The left radio altimeter suddenly indicated an erroneous height of -8 feet during the approach and may have caused the landing gear warning horn to activate prematurely. The crew acknowledged the radio altimeter as the possible cause, but did not discuss any further ramifications of the malfunction. These types of radio altimeter system faults were common in the fleet. In fact, Turkish Airlines documented 235 such faults in the previous year, including 16 on the accident aircraft.

Once the aircraft captured the localizer and glide slope, with the left radio altimeter reading -8 feet, the autothrottle’s conditions for retarding thrust for landing were met and the autothrottles brought both engines to idle thrust. The captain’s flight display annunciated “RETARD” and his flight director steering bars disappeared. They were nearly 2,000 feet in the air.
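A rough Python sketch makes the trap easier to see. It is a deliberately simplified illustration, not Boeing's actual logic: the real system checks more conditions than height alone. The 27-foot threshold and the "left unless not working" rule come from the description above. The `valid` flag, the class and function names, and the 1,950-foot right-side reading are assumptions for illustration only.

```python
from dataclasses import dataclass

RETARD_HEIGHT_FT = 27  # from the text: autothrottles retard below 27 feet


@dataclass
class RadioAltimeter:
    height_ft: float
    valid: bool = True  # assumption: a "working" flag the autothrottle trusts


def autothrottle_height(left: RadioAltimeter, right: RadioAltimeter) -> float:
    """Use the left radio altimeter unless it is flagged as not working."""
    return left.height_ft if left.valid else right.height_ft


def retard_commanded(left: RadioAltimeter, right: RadioAltimeter) -> bool:
    """Simplified: retard whenever the selected height is below the threshold."""
    return autothrottle_height(left, right) < RETARD_HEIGHT_FT


# A wrong reading that still looks "working" wins over a good one.
left = RadioAltimeter(height_ft=-8)
right = RadioAltimeter(height_ft=1950)  # illustrative value only
print(retard_commanded(left, right))  # True
```

In this sketch the good right-side reading is never consulted, because nothing has declared the left side failed. An erroneous value is treated the same as a correct one.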

Because they were still descending steeply to join the glide slope and attempting to decelerate as they increased flap settings, the crew failed to notice that the throttles never increased from idle once they were on glide path and on speed. They had several further clues but failed to notice the abnormally high deck angle as the right autopilot trimmed the stabilizer to maintain glide slope and the idle thrust allowed the airspeed to fall 34 knots below target.

At 460 feet above the ground the stick shaker activated. It took the startled crew almost 25 seconds to fully commit to the stall recovery, but by then it was too late. The airplane impacted 1.5 kilometers short of the runway and broke into three pieces. Of the 135 souls on board, 9 were killed.

The crew never considered level reversion because the highest level of automation, the right autopilot’s capture of the localizer and glide slope, was working just fine. By the time the crew arrived at their “click, click” moment it was too late. If you were to consider the automation as not just a collection of hardware and software but as another pilot, you would say this particular pilot became distracted by a minor systems glitch and failed to fly the airplane. With that share of the blame assigned, you could also say the other three pilots on that flight deck failed in their duties to monitor. We need to start thinking of our seemingly infallible automation as just another pilot that can make mistakes, just like the rest of us.

More about this accident: Turkish Airlines 1951.

Automation Resource Management

Our avionics have come a long way since the days of the first wing leveler and the basic altitude hold function. The same cannot be said of our automation philosophy. Perhaps it is time to treat the autopilot with the same respect and wariness we provide to any other pilot. Perhaps it is time to extend some of our best Crew Resource Management (CRM) skills to the automation. Let’s call it Automation Resource Management (ARM).

We use CRM to continually support and monitor the other pilot. Why not ARM to continually support and monitor the flight automation systems? A very good first officer mentally flies the airplane and is ready to offer the captain everything from gentle hints (“A little left of course, boss”) to a slap in the face (“Pull up, now!”). But the CRM skill needed here isn’t what you would find between captain and first officer. While the automation is usually very good, sometimes it can be a little “thick.”

Let’s call this thick automation pilot “Otto.” If you think of yourself as a training captain and “Otto” as a newly hired first officer, you will have the right mindset. Think of Otto as a “noob”: he is very capable but doesn’t always understand instructions as they are meant. Like any other “noob,” Otto can handle specifically assigned tasks, but even with these he needs to be watched. When pilots assume this role with the electrons, a five-step process is in order: decide, predict, execute, confirm, and adjust.

- Decide — For every action, decide ahead of time how the action should be accomplished. If, for example, you are assigning Otto the task of climbing from 5,000 to 6,000 feet, decide how that is best accomplished. For your aircraft this might be by entering the next altitude in the altitude select window, pressing the vertical speed button, and dialing a rate of 1,000 feet per minute.

- Predict — Before actually turning Otto loose, predict what the outcome will be. In our example, we expect the following steps: the next altitude to show up in the pilot’s flight display, the vertical speed button to illuminate, the altitude hold indicator to extinguish, the vertical speed mode annunciated, the flight director to increase in pitch, the autothrottles to increase slightly, the airplane to climb at 1,000 feet per minute, the next altitude to capture about 100 feet prior to level off, the vertical climb annunciator to extinguish, the aircraft to level off, the altitude hold annunciator to illuminate, and the autothrottles to reduce slightly.

- Execute — When activating Otto, it can be helpful to involve more than just the tactile sensation of pressing the button or dialing in the desired setting. If we verbally announce the action (“Vertical speed, one-thousand”) and point to the expected result (such as the pilot’s flight display annunciator) we involve aural and visual senses to help confirm the action. This also helps the other pilot play his or her CRM/ARM role. For more about this technique, see: Pointing and Calling.

- Confirm — Ensure the predicted outcome happens. This confirmation needs to encompass more than just the specific task but also any unintended consequences. For example, in our climb instruction, did the act of setting a new altitude and the vertical speed mode impact any previously entered vertical navigation command? Did our climb instruction take us off a “climb via” standard departure?

- Adjust — If Otto fails to react as anticipated, give him a simpler way to accomplish the same objective. If that too fails, further simplify the task and be prepared to take control completely. If our 1,000-foot altitude climb results in a correct pitch response but full climb thrust and rapidly increasing airspeed, it may be time to examine the command speed target. Perhaps the flight management system’s speed profile was in error. You may need to overrule the autothrottles but permit Otto to continue the level off.
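The predict and confirm steps amount to writing down what you expect before you press the button and then comparing it with what actually happens. Here is a minimal sketch of that comparison, using the 5,000 to 6,000 foot climb from the example. The function name `confirm`, the dictionary keys, and the values are all hypothetical labels, not any avionics interface.

```python
from typing import Any, Dict, Tuple


def confirm(predicted: Dict[str, Any],
            observed: Dict[str, Any]) -> Dict[str, Tuple[Any, Any]]:
    """Return every item where Otto did not do what was predicted.

    Each entry maps the item to (expected, actual). An empty result
    means the prediction held and there is nothing to adjust.
    """
    return {
        item: (expected, observed.get(item))
        for item, expected in predicted.items()
        if observed.get(item) != expected
    }


# Predict: what we expect after selecting 6,000 feet and vertical speed.
predicted = {
    "altitude_select_ft": 6000,
    "vertical_mode": "VS",
    "vertical_speed_fpm": 1000,
    "autothrottle": "slight increase",
}

# Confirm: pitch and mode are right, but the thrust is not.
observed = dict(predicted, autothrottle="full climb thrust")
surprises = confirm(predicted, observed)
# surprises == {"autothrottle": ("slight increase", "full climb thrust")}
```

The point of the sketch is the order of operations. A discrepancy can only be found if the prediction was made before the action, and the item that comes back tells you which part of Otto's task to simplify or take over.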

There is no doubt that many of our current generation airplanes have flight management systems that make real time decisions at an accuracy rate better than any pilot. But these systems can also make mistakes at a faster rate and it is up to the human being to catch these mistakes before they become tragic. The “click, click” response can leave the pilot with not only the task of figuring out what to do next, but having to do this while suddenly manipulating a handful of airplane.

The modern approach to this problem has been to ratchet down the automation level so the pilot can gradually assume increasing levels of control; this should minimize the “startle response.” But automation failures rarely pop up as cut and dried failures. As the complexity of the automated systems increases, error detection and troubleshooting become increasingly difficult. Merely removing the highest level of automation may not cure what ails us.

However, if we start to think of the automated systems as a fellow pilot, albeit a novice pilot, we can start to better manage the automation. We know this novice pilot is very good at executing a task when given very precise instructions; but not so good at reading between the lines and certainly incapable of reading our minds. If we approach every automation task by thinking of ourselves as flight instructors and the electrons as students, we will be much better prepared for the times when the automation suddenly misbehaves. Then we can start to embrace a new philosophy of managing the novice pilot: Automation Resource Management.

4

Humble pie

Of the 14 technology screw-ups listed on this page, 8 are screw-ups of my own. Do you think less of me as a result? I hope not. I hope you realize that I am baring all so that others can learn from the mistakes I have made. There is another reason. By reliving each mistake, analyzing it, and preaching the solutions, I solidify the lessons learned in my own mind. It makes me a better pilot. But there is yet another benefit. As the "boss pilot" in my flight department, I am letting the other pilots know that it is okay to point out my errors and therefore their own. You might think pilots shouldn't admit their flaws and that leaders certainly shouldn't. But give it some more thought, please. I think it is the secret to safer flight operations. Especially in a high tech environment.

References

(Source material)

Dutch Safety Board, "Crashed during approach, Boeing 737-800, near Amsterdam Schiphol Airport, 25 February 2009," The Hague, May 2010 (project number M2009LV0225_01)