The patron saint of reliability engineering is Captain Edward Murphy, though he may or may not deserve the credit or the praise. He designed G-force gauges for use on rocket test sleds and centrifuges. When these were needed for a rocket sled making deceleration tests, his unclear instructions led to faulty installation and lost test data. Some say he passed the blame onto his subordinates, though in later interviews he accepted it. The people running the program rephrased one of Murphy's explanations as "If it can happen, it will happen." Regardless of who is right, "Murphy's Law" has become known worldwide. For pilots, Murphy's Law can help when looking for redundancy in systems and procedures: it is a way to come up with a "Plan B" before a Plan B is needed.

— James Albright

Updated: 2018-06-20

Most of us know Murphy's Law stated this way: "What can go wrong will go wrong." The people with the strongest claim to having coined the law state it this way: "If it can happen, it will happen." For many of us, the difference is academic, and the saying itself is merely an excuse to explain why things sometimes go wrong.

As pilots, we can learn from the history of Murphy's Law and how it relates to reliability engineering. That way we can come up with our own corollary: "If something unsafe can happen, it is up to us to predict it so we can prevent it."

1

The history behind Murphy's Law

All of this comes from A History of Murphy's Law by Nick T. Spark. It is a very good case study of how the law came into being, and that, in itself, offers hints on how to avoid falling prey to the law, "whatever can go wrong, will go wrong." Sadly, the book is out of print. I've quoted from it liberally.

There appears to be a dispute between advocates of Colonel John Stapp and Captain Edward Murphy over who really invented the law attributed to Murphy. Of the two, I think Stapp is the more likely candidate, and I have chosen to tell the story mostly crediting him.

It all started in 1947 at Muroc Air Force Base, which was later renamed Edwards Air Force Base. A top secret program, "Project MX981," was commissioned. Author Nick Spark interviewed David Hill, Sr., a member of the secret test team . . .

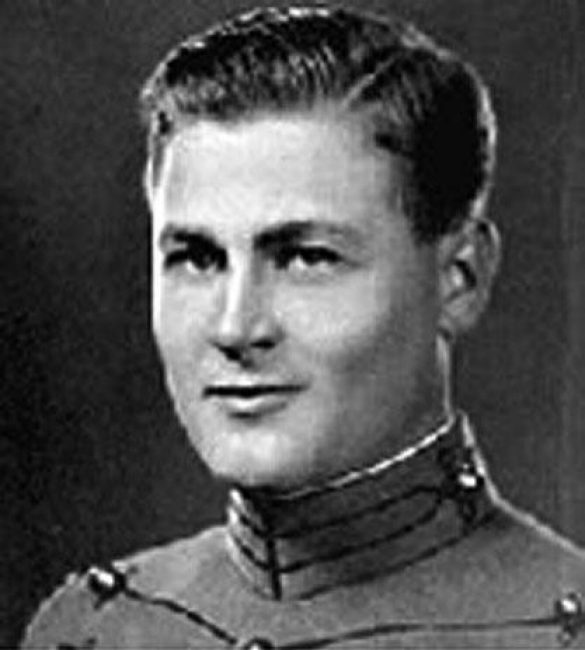

Colonel John P. Stapp

Hill recalls events with startling clarity. Back in 1947, he'd accepted a job at Northrop and been dispatched to Muroc (later renamed Edwards AFB) to work on the top secret Project MX981. These tests were run by Captain Stapp. Stapp, Hill says with emphasis, wasn't just an Air Force officer. He was a medical doctor, a top-notch researcher, and a bit of a Renaissance man.

The goal of MX981 was to study "human deceleration". Simply put, the Air Force wanted to find out how many G's (a 'G' is the force of gravity acting on a body at sea level) a pilot could withstand in a crash and survive. For many years, Hill explains, it had been believed that this limit was 18 Gs. Every military aircraft design was predicated on that statistic, yet certain incidents during WWII suggested it might be incorrect. If it was, then pilots were needlessly being put at risk.

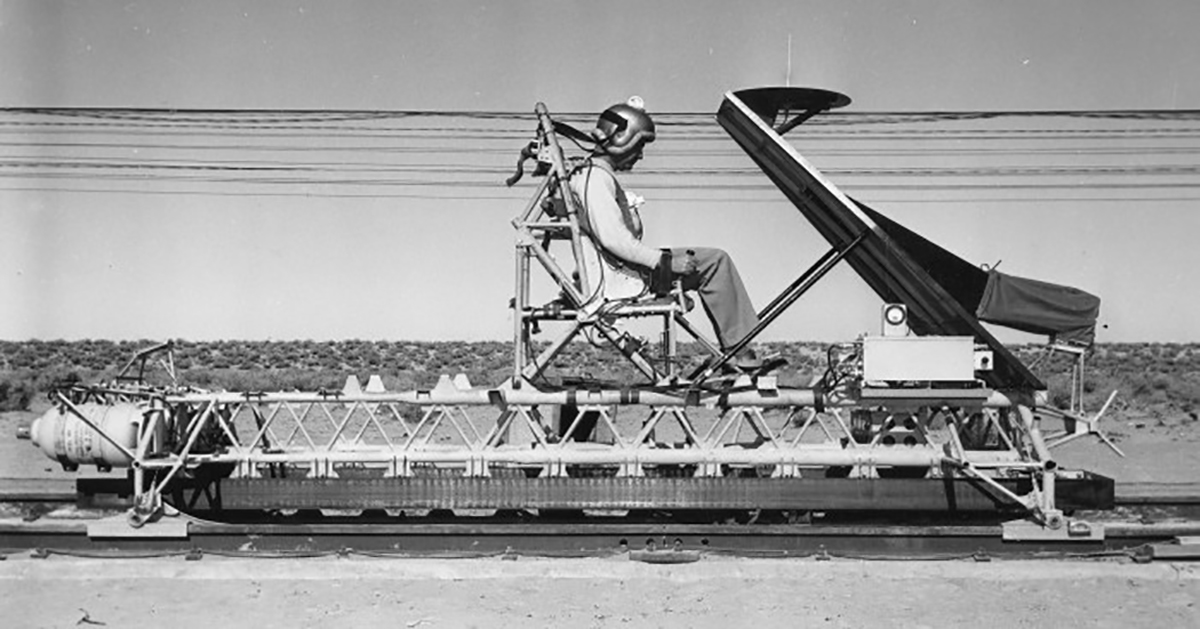

To obtain the required data, the Aero Medical Lab at Wright Field contracted with Northrop to build a decelerator. "It was a track, just standard railroad rail set in concrete, about a half-mile long," says David Hill, pointing to one of the photos. "It had been built originally to test launch German V-1 rockets - buzz bombs - during WWII." At one end of the track engineers installed a long series of hydraulic clamps that resembled dinosaur teeth. The sled, nicknamed the "Gee Whiz", would hurtle down the track and arrive at the teeth at near maximum velocity, upwards of 200 mph. Exerting millions of pounds of force, the clamps would seize the sled and brake it to a stop in less than a second. In that heart-stopping moment, the physical forces at work would be the equivalent of those encountered in an airplane crash.

The brass assigned a 185-pound, absolutely fearless, incredibly tough, and altogether brainless anthropomorphic dummy named Oscar Eightball to ride the Gee Whiz. Stapp, David Hill remembers, had other ideas. On his first day on site he announced that he intended to ride the sled so that he could experience the effects of deceleration first-hand. That was a pronouncement that Hill and everyone else found shocking. "We had a lot of experts come out and look at our situation," he remembers. "And there was a person from MIT who said, if anyone gets 18Gs, they will break every bone in their body. That was kind of scary."

But the young doctor had his own theories about the tests and how they ought to be run, and his nearest direct superiors were over 1000 miles away. Stapp had done his own series of calculations, using a slide rule and his knowledge of both physics and human anatomy, and concluded that the 18 G limit was sheer nonsense. The true figure, he felt, might be twice that if not more. It might sound like heresy, but just a few months earlier someone else had proved all the experts wrong. Chuck Yeager, flying in the Bell X-1, broke the sound barrier in the same sky that sheltered the Gee Whiz track. Not only did he not turn to tapioca pudding or lose his ability to speak, as some had predicted, but he'd done it with nary a hitch. "The real barrier wasn't in the sky," he would say, "but in our knowledge and experience."

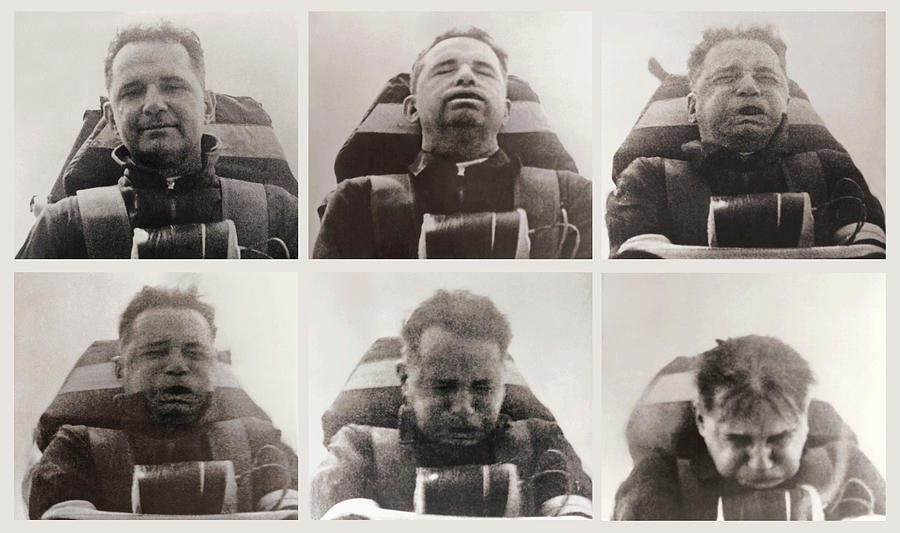

Finally, in December 1947, after 35 test runs with dummies and chimpanzee test subjects, Stapp got strapped into the steel chariot and took a ride himself. Only one JATO rocket bottle was used, and the brakes produced a mere 10 Gs of force. Stapp called the experience "exhilarating." Slowly, patiently, he increased the number of rockets and the stopping power of the brakes. The danger level grew with each test, but Stapp was resolute, Hill recalls, even after suffering some painful injuries. Within a few months, Stapp had not only subjected himself to 18 Gs, but to nearly 35. That was a stunning figure, one which everyone on the test team recognized would forever change the design of airplanes and pilot restraints.

Which brings up the famous incident. At one point an Air Force engineer named Captain Murphy visited Edwards. He brought four sensors, called strain gauges, which were intended to improve the accuracy of the G-force measurements. The way Hill tells it, one of his two assistants, either Ralph DeMarco or Jerry Hollabaugh, installed the gauges on the Gee Whiz's harness.

Later Stapp made a sled run with the new sensors and they failed to work. It turned out that the strain gauges had accidentally been put in backwards, producing a zero reading. "If you take these two over here and add them together," Hill explains, "you get the correct amount of G-forces. But if you take these two and mount them together, one cancels the other out and you get zero."

Murphy soon left, but his sour comment made the rounds at the sled track. "When something goes wrong," Hill says, "The message is distributed to everyone in the program." The way the fat got chewed, Murphy's words — "if there's any way they can do it wrong, they will" — were apparently transformed into a finer, more demonstrative "if anything can go wrong, it will." A legend had been hatched. But not yet born.

Just how did the Law get out into the world? Well, according to David Hill, John Paul Stapp held his first-ever press conference at Edwards a few weeks after the incident. As he was attempting to explain his research to a group of astonished reporters, someone asked the obvious question, "How is it that no one has been severely injured - or worse - during these tests?" Stapp, who Hill remembers as being something of a showman, replied nonchalantly that "we do all of our work in consideration of Murphy's Law." When the puzzled reporters asked for a clarification Stapp defined the Law and stated, as Hill puts it, "the idea that you had to think through all possibilities before doing a test" so as to avoid disaster.

It was a defining moment. Whether Stapp realized it or not, Murphy's Law neatly summed up the point of his experiments. They were, after all, dedicated to trying to find ways to prevent bad things - aircraft accidents - from becoming worse. As in fatal. But there was a more significant meaning that touched the very core of the life mission of an engineer. From day one there had been an unacknowledged but standard experimental protocol. The test team constantly challenged each other to think up "what ifs" and to recognize the potential causes of disaster. If you could predict all the possible things that can go wrong, the thinking went, you could figure out a way to prevent catastrophe. And save John Stapp's neck.

Source: Spark, pp. 10 - 14

A 1954 newsreel about this: Fastest Man on Earth.

Spark then interviewed George Nichols, a longtime associate of then-Captain Stapp.

When the Gee Whiz tests were completed, Stapp convinced the Aero Medical Lab to build a much more sophisticated sled called the Sonic Wind at Holloman, New Mexico. On his 29th and what turned out to be final sled ride, Stapp reached a speed of 632 miles per hour - actually faster than a speeding bullet - and encountered 46.2 G's of force. In his pursuit of the knowledge of the physiological limits of the human body, Stapp hadn't just pushed the envelope, he'd mailed it to the post office.

632 miles per hour actually broke the land speed record, making Stapp the fastest man on earth. And 46.2 G's was the most any human being had ever willingly experienced. Prior to the test Nichols had real doubts about whether it was really survivable. It turned out it was, although Stapp paid a severe penalty. When the Sonic Wind stopped, he suffered a complete red out. "His eyes had hemorrhaged and were completely filled with blood," Nichols remembers, his voice cracking. "It was horrible. Absolutely horrible." Fortunately, there was little permanent damage, and a day later the visionary could see again more or less normally. He'd have a trace image in his field of vision for the rest of his life.

Which leads us to Murphy's Law. The reason most people get it wrong, Nichols indicates, is that they don't know how it was originally stated or what it meant. "It's supposed to be, 'If it can happen, it will'," says Nichols, "not 'whatever can go wrong, will go wrong.'" The difference is a subtle one, yet the meaning is clear. One is a positive statement, indicating a belief that if one can predict the bad things that might happen, steps can be taken so that they can be avoided. The other presents a much more somber, some might say fatalistic, view of reality.

Source: Spark, pp. 21 - 23

That Murphy Fellow

How did the Law come into being? Nichols relates a story similar to Hill's, only more detailed. Captain Edward A. Murphy, he says, was a West Point-trained engineer who worked at the Wright Air Development Center. "That's a totally separate facility from the Aero Med Lab," he emphasizes. "He had nothing to do with our research." Nevertheless, Murphy one day appeared at the Gee Whiz track. With him the interloper brought the strain gauge transducers that Hill described. "A transducer," Nichols says, seeing my blank look of confusion, "is a measuring device. And these particular transducers were actually designed by Murphy."

I begin to understand the reason for Murphy's visit. His strain gauges represented a potential solution to a problem with the Gee Whiz's G-force instrumentation. Questions had been raised about the accuracy of the data obtained from sled-mounted accelerometers. What Murphy hoped to do was to actually use the test subject, be it a dummy, chimpanzee or human being, to help obtain better data. The subject always wore a restraint system consisting of a heavy harness equipped with two tightening clamps. Murphy hoped to place strain gauge bridges in two positions on each clamp. When the sled came to a stop, the bending stress placed on the clamps would be measured, and from that a highly accurate measure of G-force could be produced.

When he showed up, Murphy got Stapp's full attention. He asked if Nichols would install his transducers immediately. Not that he didn't enjoy the sunshine out at Edwards, but he wanted to return to Wright Field the next morning. "And I said, well, we really ought to calibrate them," remembers Nichols. "But Stapp said, 'No, let's take a chance. I want to see how they work.' So I said okay, we'll put them on. So, we put the straps on and took a chance on what we thought the sensitivity was."

A few hours later a test was run with a chimp, and to Nichols' surprise the chart produced by Murphy's strain gauges showed no deflection at all. "It was just a steady line like it was at zero," Nichols comments. Even if they'd been calibrated wrong, the transducers should have registered something. "And we guessed," Nichols continues, "that there was a problem with the way the strain gauges were wired up."

An examination revealed that there were two ways the strain gauge bridges could have been assembled. If wired one way - the correct way - they would measure bending stress. In the other direction they would still function, but the bending stress reading would effectively be canceled out. In its place would be a measure of the strap tension, which in the case of determining G force load was useless. "David Hill and Ralph DeMarco checked the wiring," Nichols continues, "and sure enough that's how they'd wired the bridges up." Backwards.
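
Nichols' description of the two ways to assemble the bridges can be sketched numerically. The model below is a simplification and an assumption, not Murphy's actual circuit, which the source does not describe: each clamp carries two gauges on opposite faces, bending stretches one face and compresses the other by the same amount, and strap tension stretches both faces equally. Subtracting the two faces (roughly what happens when gauges sit in adjacent arms of a Wheatstone bridge) keeps the bending and cancels the tension; adding them (opposite arms) does the reverse. The class name, function name, and strain values are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class GaugePair:
    """Two strain gauges on opposite faces of one harness clamp.

    Hypothetical, idealized model (strains in microstrain); not the 1947 circuit.
    """
    bending: float  # stretches the top face, compresses the bottom face
    tension: float  # stretches both faces equally

    def top_face(self) -> float:
        return self.tension + self.bending

    def bottom_face(self) -> float:
        return self.tension - self.bending


def bridge_reading(pair: GaugePair, wired_correctly: bool) -> float:
    """Combined signal from the pair.

    Correct wiring subtracts the faces: tension cancels, bending doubles.
    Reversed wiring adds them: bending cancels, tension doubles.
    """
    if wired_correctly:
        return pair.top_face() - pair.bottom_face()
    return pair.top_face() + pair.bottom_face()


clamp = GaugePair(bending=500.0, tension=50.0)
print(bridge_reading(clamp, wired_correctly=True))   # 1000.0
print(bridge_reading(clamp, wired_correctly=False))  # 100.0
```

With these made-up numbers, the reversed pair loses the bending signal entirely and reports only strap tension. Under pure bending with no tension it would read exactly zero, much like the flat line on Nichols' chart.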

But unlike David Hill, Nichols insists that the error with the gauges had nothing to do with DeMarco, Hollabaugh, or anyone else on the Northrop team. By "they," Nichols meant Captain Murphy and his assistants at Wright Field, who had assembled the devices.

The way Nichols tells it, the gauges hadn't been installed wrong; they'd actually been delivered as defective merchandise. Nichols' initial thought was that Murphy had probably designed the gauges incorrectly. But there was another possibility: that he'd drawn his schematic unclearly, causing his assistant to accidentally wire them backwards. If that's what happened, and Nichols reasoned it was likely, then the assistant had truly encountered some bad luck. He'd had a 50% chance of wiring each gauge correctly or incorrectly, yet he'd managed to wire all four wrong. He'd defied the odds or perhaps, in some respect, he'd defined them . . . Either way, Nichols figured, Murphy was at fault because he obviously hadn't tested the gauges prior to flying out to Edwards. That ticked George off because setting up and running a test was time-consuming, expensive, and nerve-wracking.
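
Nichols' "defied the odds" remark is simple arithmetic if, as he assumed, each gauge was an independent 50/50 proposition. A minimal sketch, with a hypothetical function name:

```python
from fractions import Fraction


def chance_all_wrong(gauges: int, p_wrong: Fraction = Fraction(1, 2)) -> Fraction:
    """Probability that every gauge is assembled wrong.

    Assumes each gauge is wired independently, with the same chance of error.
    """
    return p_wrong ** gauges


odds = chance_all_wrong(4)
print(odds)         # 1/16
print(float(odds))  # 0.0625
```

The 1-in-16 figure only holds if the four assemblies were independent. If a single unclear schematic misled the assistant, the four errors were really one error repeated four times, which arguably points back at the schematic rather than at luck.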

"When Murphy came out in the morning and we told him what happened," remembers Nichols, "He was unhappy." But much to Nichols' surprise, Murphy didn't for a moment consider his part in the error - drawing an unclear schematic. Instead, he almost spontaneously blamed the error on his nameless assistant at Wright. "If that guy has any way of making a mistake," Murphy exclaimed with disgust, "He will."

According to Nichols, the failure was only a momentary setback ("the strap information wasn't that important anyway," he says), and, regardless, good data from the test had been collected from the other instruments. The Northrop team rewired the gauges, calibrated them, and did another test. This time Murphy's transducers worked perfectly, producing highly reliable data. From that point forward, in fact, Nichols notes, "we used them straight on" because they were such a good addition to the telemetry package. But Murphy wasn't around to witness his devices' success. He'd returned to Wright Field and never visited the Gee Whiz track again.

Long after he'd departed, however, Murphy's comment about the mistake hung in the air like a lonely cloud over the Rogers dry lake. Part of the reason was that no one was particularly happy with Murphy, least of all Nichols. The more he thought about the incident, the more it bothered him. He became all but convinced that Murphy, and not his assistant, was at fault. Murphy had "committed several cardinal sins" with respect to reliability engineering. He hadn't verified that the gauges had been assembled correctly prior to leaving Wright Field, he hadn't bothered to test them, and he hadn't given Nichols any time to calibrate them. "If he had done any of those things," Nichols notes dryly, "he would have avoided the fiasco."

As it was, Murphy's silly, maybe even slightly asinine comment made the rounds. "He really ticked off some team members by blaming the whole thing on his underling," Nichols says. "And we got to thinking as a group. You know? We've got a Murphy's Law in that. Then we started talking about what it should be. His statement was too long, and it really didn't fit into a Law. So we tried many different things and we finally came up with, 'If it can happen, it will happen.'"

So Murphy's Law was created, more or less spontaneously, by the entire Northrop test team under the supervision of Nichols. In one sense, it represented a bit of sweet revenge exacted on Ed Murphy. But George Nichols rapidly recognized that it was far more than that. Murphy's Law was a wonderful pet phrase, an amusing quip that contained a universal truth. It proved a handy touchstone for Nichols' day-to-day work as a project manager. "If it can happen, it will happen," he says. "So you've got to go through and ask yourself, if this part fails, does this system still work, does it still do the function it is supposed to do? What are the single points of failure? Murphy's Law established the drive to put redundancy in. And that's the heart of reliability engineering."
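
Nichols' questions about single points of failure and redundancy can be put into numbers with the standard reliability formulas for parts in series and in parallel. The sketch below assumes part failures are independent; the function names and the 99% figures are hypothetical, chosen only for illustration.

```python
from __future__ import annotations

from math import prod


def series_reliability(parts: list[float]) -> float:
    """Probability the system works when every part must work.

    Each part is a single point of failure. Assumes independent failures.
    """
    return prod(parts)


def parallel_reliability(parts: list[float]) -> float:
    """Probability the system works when any one redundant part is enough.

    The system fails only if every part fails. Assumes independent failures.
    """
    return 1.0 - prod(1.0 - r for r in parts)


# Hypothetical example: parts that each work 99% of the time.
print(round(series_reliability([0.99, 0.99, 0.99]), 6))  # 0.970299
print(round(parallel_reliability([0.99, 0.99]), 6))      # 0.9999
```

Three 99% parts in a chain give a system that works about 97% of the time, because each part is a single point of failure; two 99% parts where either one will do give about 99.99%. The independence assumption matters: a common cause, such as the same miswired design installed in both channels, defeats the redundancy entirely.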

Source: Spark, pp. 24 - 27

2

Reliability engineering

I often joke that we Air Force pilots are issued an oversized ego on day one of pilot training, just before we get our first Nomex flight suit. I think the same can be said of engineers. I've quoted from Henry Petroski, an engineer who brings his profession down a notch or two, as the title of his excellent book makes clear: To Engineer Is Human. I use the quotes as capstones to thoughts on the engineering side of being a pilot. I think we can all learn from his engineering lessons.

Learn from mistakes

No one wants to learn by mistakes, but we cannot learn enough from successes to go beyond the state of the art. Contrary to their popular characterization as intellectual conservatives, engineers are really among the avant-garde. They are constantly seeking to employ new concepts to reduce the weight and thus the cost of their structures, and they are constantly striving to do more with less so the resulting structure represents an efficient use of materials. The engineer always believes he is trying something without error, but the truth of the matter is that each new structure can be a new trial. In the meantime the layman, whose spokesman is often the poet or the writer, can be threatened by both the failures and the successes. Such is the nature not only of science and engineering, but of all human endeavors.

Source: Petroski, p. 62

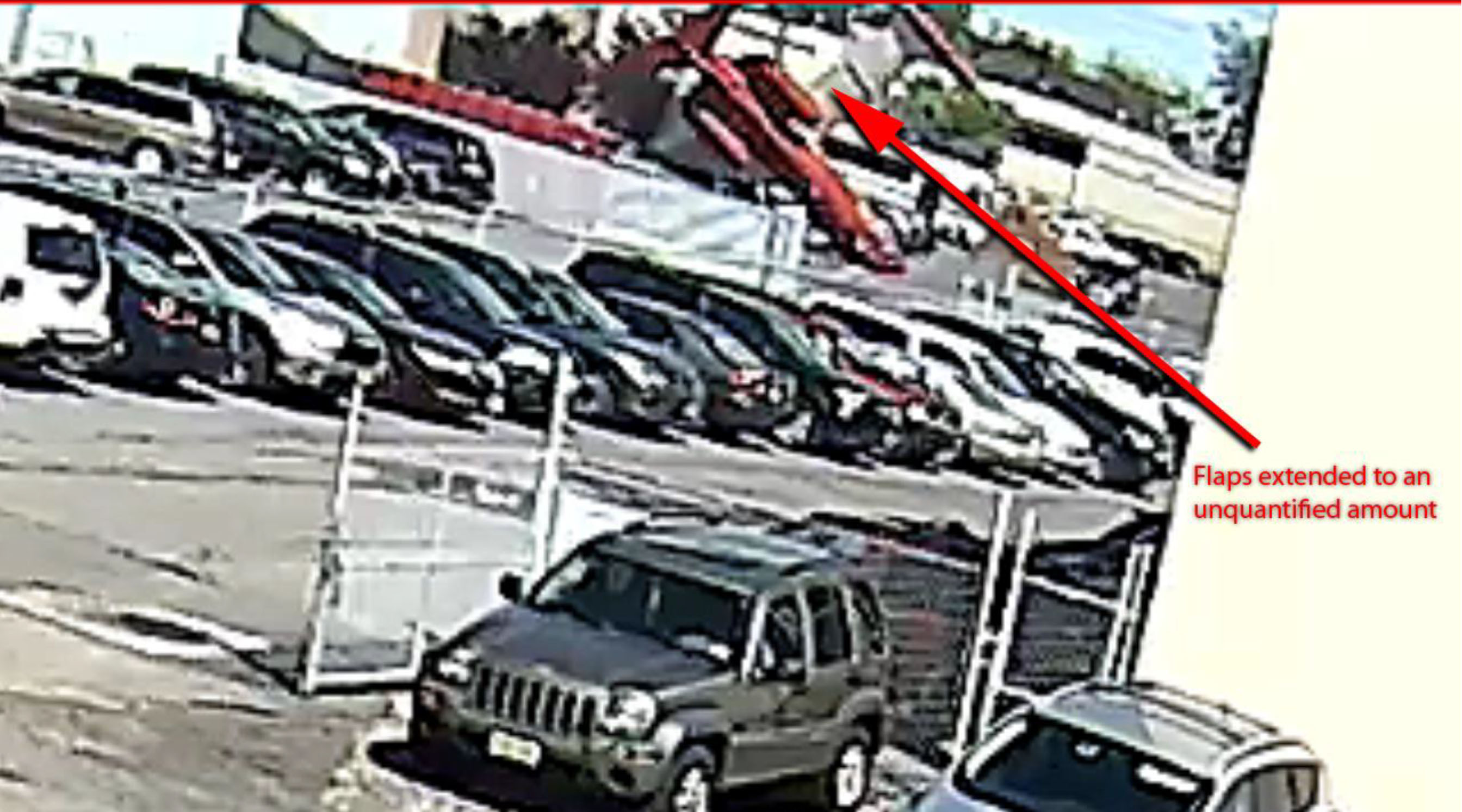

Security video shot at 770 Commercial Avenue, Accident Docket N452DA, Security Video Recordings, Figure 4.

The two pilots never figured out the circle-to-land maneuver in the box. That only made things worse in the airplane.

See: Case Study: LR35 N452DA for more about this accident.

We learn from our mistakes, no doubt about it. This is true from our earliest days of learning to ride a bicycle, drive a car, and then fly an airplane. But each step along the way increases the price of mistakes, both in dollars and injury. With complex, modern aircraft, we are afforded the luxury of full-motion simulators that provide the lessons without the costs. We need to fully embrace that.

The problem, as any graduate of a simulator type rating ride will tell you, is that the training is accompanied by an evaluation that directly affects our paychecks. We often try to minimize the pain by going as easy as possible on ourselves. Departing the paved surface on lesson one, for example, can be laughed off as first-day nerves. Departing controlled flight on lesson two may just be us still getting the feel for the machine. I've had my moments over the years, to be sure. After one of them, I sat in the seat for a few minutes, unable to talk. I am told I took it too seriously. Perhaps. But they tell us to treat the box as if it were the aircraft. If you make a mistake in the box that you wouldn't forgive in the aircraft, you need to figure out what happened and why, and learn how to prevent it from happening in the future.

Check your work, be willing to have your work checked, learn to check the work of others

Engineers today, like Galileo three and a half centuries ago, are not superhuman. They make mistakes in their assumptions, in their calculations, in their conclusions. That they make mistakes is forgivable; that they catch them is imperative. Thus it is the essence of modern engineering not only to be able to check one's own work, but also to have one's work checked and to be able to check the work of others. In order for this to be done, the work must follow certain conventions, conform to certain standards, and be an understandable piece of technical communication. Since design is a leap of the imagination, it is engineering analysis that must be the lingua franca of the profession and the engineering-scientific method that must be the arbiter of different conclusions drawn from analysis. And there will be differences, for as problems come to involve more complex parts than cantilever or even simply supported beams, the interrelations of those various parts not only in reality but also in the abstract of analysis become less and less intuitive. It is not so easy to get a feel for a mammoth structure like a jumbo jet or a suspension bridge by flexing a paint store yardstick in one's hands. And the hypothesis that a structure will fly safely through wind and rain can be worth millions of dollars and hundreds of lives.

Source: Petroski, p. 52

N121JM Wreckage, aerial photograph, from Accident Docket N121JM, figure 6.

The two pilots had an environment where checklists went unspoken and deviances had become, in a word, normal.

See: Case Study: Gulfstream GIV N121JM for more about this accident.

In a civil society it is considered impolite to comment negatively about another person's knowledge, skill, or demeanor. In many parts of military aviation and some parts of the airline world, this politeness has given way to brutal, in-your-face harassment. In the genteel world of corporate aviation, however, civil society reigns supreme and nary a negative word is ever given in most cockpits. We've got it all wrong. Mistakes are inevitable in any complex endeavor, aviation included. We should always be checking our own work, obviously. But we should encourage others — the Pilot Monitoring, for example — to do the same.

But how do you break the ice on a positive and productive critique session? You may want a critique but your fellow pilot may not want to risk your anger. Try this: critique yourself and ask the other pilot for suggestions on how to improve. React positively to anything offered. If the shoe is on the other foot, offer polite and respectful suggestions. A friend of mine used to say, "I've done worse and bragged about it." That might not be the right answer, but it certainly encourages a friendly and open exchange of ideas and techniques.

Learn about what not to do, as well as what to do

Although the design engineer does learn from experience, each truly new design necessarily involves an element of uncertainty. The engineer will always know more what not to do than what to do. In this way the designer's job is one of prescience as much as one of experience. Engineers increase their ability to predict the behavior of their untried designs by understanding the engineering successes and failures of history. The failures are especially instructive because they give clues to what has and can go wrong with the next design; they provide counterexamples.

Source: Petroski, p. 105

Aircraft Damage, from Transportation Safety Board of Canada, Aviation Investigation Report, Photo 4.

The two pilots got away with aiming for the first inch of runway in their Challenger 604, which has a very low deck angle on approach. This didn't end well in their brand-new Global Express, which has a more conventional approach deck angle.

See: Case Study: Bombardier BD-700 C-GXPR for more about this accident.

Whether we realize it or not, many of our procedures and techniques are geared towards not doing something stupid or risky, as opposed to doing something wise and "unrisky." I learned this early on as a copilot in the KC-135A, an aircraft that had an abysmal safety record until the Air Force got fed up with losing so many of them. (Nearly two every year between 1958 and 1999.) We were told every warning in the flight manual was written in blood.

The KC-135's safety record is much better now, as are the safety records of most transport category aircraft these days. There is very little new to learn; we just have to avoid forgetting the lessons of the past. "Don't duck under" seems an obvious one. But we keep doing it, and the accident records at the NTSB prove that fact.

Learn to analyze failure

Thus sometimes mistakes occur. Then it is failure analysis, the discipline that seeks to reassemble the whole into something greater than the sum of its broken parts, that provides the engineer with caveats for future designs. Ironically, structural failure and not success improves the safety of later generations of a design. It is difficult to say whether a century-old bridge was overdesigned or how much lighter the frames of forty-year-old buses could have been.

Source: Petroski, p. 121

TransAsia GE235 Loss of control and initial impact sequence, (Accident Occurrence Report, Figure 1.1-2)

See: Case Study: TransAsia GE235 for more about this accident.

From very early on I have heard that it is wise to learn from your mistakes, and that sentiment has continued for as long as I have been a pilot. But the engineer in me has always added to the thought: "It is wiser to learn from someone else's mistakes." When the cost of making a mistake in an airplane can be very high, we should learn to analyze deeply what went wrong so that we don't repeat the mistake.

But we can be tempted to do this all wrong. If you read that the pilot in a recent accident shut down the wrong engine, you can be tempted to say, "I would never do that." That is like saying, "I'm better than this pitiful soul." That might be true. But consider that so many of the pilots in accident reports were quite good, maybe better than you (and me). But somehow they fell victim. I recommend you start reading accident reports with an open mind. A mind that says, "that could have been me." Want to give it a try? At last count, I have 152 for you to look at: Case Studies.

A few more case studies to consider

You know from experience that the news media often get it wrong the day of an aviation accident, and quite often continue to peddle the wrong story even after the facts are out. But having the wrong facts pushed into your psyche can rob you of an important lesson or even teach you the wrong lesson. The more often you discover this, the more likely you will be to hold your fire until the facts are out. That skill will make you a better pilot. Here are a few case studies that might help in that process.

- Cutting Corners. The crew was doomed before they took off because the operator failed to adequately service the airplane. The crew could have caught the problem with a thorough preflight, but they failed to do so. With a few techniques, they could have detected the problem before it was too late. But none of this happened. The lesson here is, unfortunately, germane to many corporate operators: if you are flying for an organization that is prone to cutting corners, you should double your efforts to do everything by the book. See: Case Study: LR35 N47BA for more about this.

- Doomed From Preflight. The crew failed to notice that their static ports were covered with tape, but it was a Boeing 757, the ports were too high to see, and it was night. So the crew was basically doomed before they even took off. That is the instant conclusion you would draw from reading a news report or even an accident synopsis. But the real lesson is that the accident was survivable had the pilots understood the basic relationship between thrust and pitch for most weights on their aircraft. They could also have helped themselves by understanding where their transponder's altitude readout came from versus how the GPWS determined altitude. See: Case Study: AeroPeru 603 for more about this.

- Excellent Glider Pilot, Lousy Jet Airplane Pilot. The crew was saddled with an airplane that was improperly maintained, causing a fuel leak halfway across the North Atlantic that turned their Airbus A330 into a glider. They managed to glide to a safe landing in the Azores, and not a single passenger was injured. Ergo: the crew were immediately hailed as heroes. But had the crew understood the difference between a fuel imbalance and a fuel leak, they could have landed under power before they coasted out. See: Case Study: Air Transat 236 for more about this.

- High Technology Cockpit, Low Stick and Rudder Skills. The pilots mismanaged a visual approach and ended up high and fast just a few miles out. A series of automation mistakes then left the autothrottles in a "hold" condition that would not correct the low energy state that followed. It is true that this feature of the automation was misunderstood by many pilots, but that doesn't relieve the pilots of the need to keep the airplane on glide path, on speed, on a stabilized approach. See: Case Study: Asiana Airlines 214 for more about this.

- Old School. The crew came from two different flight departments, and the aircraft gave two different options for control of the nose wheel steering. Even years after the accident, most Gulfstream pilots will tell you that the accident was caused by that switch. But the real lesson applies to all pilots flying jets and is far more important. Buried in the report is the real cause: "The PIC had 17,086 total hours of which only 6,691 was in jets and 496 in the GIV. Interviews indicated that he 'tended to unload the nose wheel on the GIV during takeoff to make it easier on the airplane on rough runways.'" Pilots flying large aircraft need to understand that there is a minimum speed below which the aircraft can be controlled on the ground only with the nose gear on the pavement, not with it in the air. You cannot lift the nose in a crosswind before this speed. In some aircraft, like the GIV, rotation speed takes this into account. If you are flying an airplane like this, lifting the nose off the pavement before rotation speed can kill you. See: Case Study: Gulfstream GIV N23AC for more about this.

- Under Pressure. The crew made a long series of mistakes on the way to killing themselves and four of their six passengers. On the surface, it would seem the accident was caused by pilots who couldn't make a proper abort decision. But unless you dig deeper, you will miss some very important lessons. You need to understand how well your tires hold pressure and become faithful about checking that tire pressure regularly. Had this flight department done that, the accident would not have occurred. You need to understand that if you are having directional control problems when using thrust reversers, your first reaction should be to cancel the reverse. Had these pilots done that, the accident could have been survived. See: Case Study: LR60 N999LJ for more about this.

3

Everyday examples

Technology often helps with these "If it can go wrong, it will" problems. Having a GPS overlay, for example, makes all sorts of raw data issues disappear. But not every aircraft has that capability, and not every approach lends itself to GPS. Sometimes it is an either/or proposition. When that happens, you need to structure your SOPs to trap the error before Murphy's Law can take hold.

James,

Once again I enjoyed your article, this time "Beating Murphy's Law." The area where you highlighted AF 447 was of particular interest to me. In the aftermath of this accident, American Airlines has spent a fair amount of training time on what we term "Unreliable Airspeed." We have done a number of versions, e.g., climbing, descending, and high altitude cruise flight. The common safety point is this: once you suspect unreliable airspeed, designate who flies and who works the checklist and troubleshoots. The pilot flying (PF) then disconnects the autothrottles and hand flies a fixed, predetermined pitch and power setting to stabilize the situation. This prevents chasing unreliable instrument indications. You are absolutely correct that pilots must know the physical limits of flight for the aircraft they are in. Any pitch above 10 degrees at FL 350 will get you into more trouble. They must also know and remember what protections are lost when flight control modes degrade in aircraft that are normally operated in the highest flight computer control mode. The normal protections may now be lost, so the pilot needs to be the protector. Modern aircraft are so reliable that it is easy to become complacent, but we must spend some time thinking, "What would I do if this bad thing (pick one) happens?" It may save a lot of lives one day. Thanks again for your good stuff.

Captain Charlie Cox, B787 Intl., American Airlines, Dallas, Texas

Charlie,

That is very encouraging. I agree these techniques can save lives. I’ve long been a fan of the flying culture at American Airlines, ever since I first saw Warren VanderBurgh’s presentation on Automation Dependency.

James

Greetings James,

I live in Africa and fly for a rather small missionary aviation organization. My aircraft is a pleasant, old Cessna 206.

I learned of your website through B&CA. That's an important publication in our hangar and I see it as a source of professional development. However, after looking at code7700, maybe I should spend more time reading what you've amassed. I just read about the self-critiquing done at Top Gun. In my world, flying without oversight for maybe months at a time, that is (or should be) a critical part of every flight I do.

I'm reaching out to you for ideas about something I'm realizing is vague and easily overlooked for all five of us fixed-wing pilots: the small button on our GPS that switches the nav mode from GPS to VOR/localizer. On an older Garmin this small button lives in the bottom left corner and is one of six buttons in a row. It's an obscure button, but it determines the source of information for the VOR head. Once I flew the most awesome VOR approach down to minimums. Everything seemed to just fall into place, and the needle was solid from top to bottom. When I broke out at the MDA I found only a sea of jungle beneath me and no airport; that small black button was still in GPS mode. Thankfully that was a check flight, and the check pilot had the self-control to let my error play out to its final consequence.

I know that I'm not alone in making this mistake. My fellow pilots tell me that they, too, must be careful to push or not push that small button on our GPS. The problem is that none of us has a bulletproof way of making sure we get it right on each and every flight. I place that action at the end of the old GUMPS checklist, but my modified version of that checklist has three S's at the end:

Gas, Undercarriage, Mixture, Prop, Seat belts, Switches, Source.

Not only is that a strange checklist, but it also places the Source of my navigation information last in line.

One of our pilots selects the appropriate source for the IAP while he briefs the approach. But this means he may select his source 15 minutes before reaching the IAF. I see that as potentially problematic.

Is this a task you must perform in your 450? And if so, are you willing to share when you do it and how you make sure you don't forget it?

Thankful for any ideas that you can place on the table,

A BCA Reader

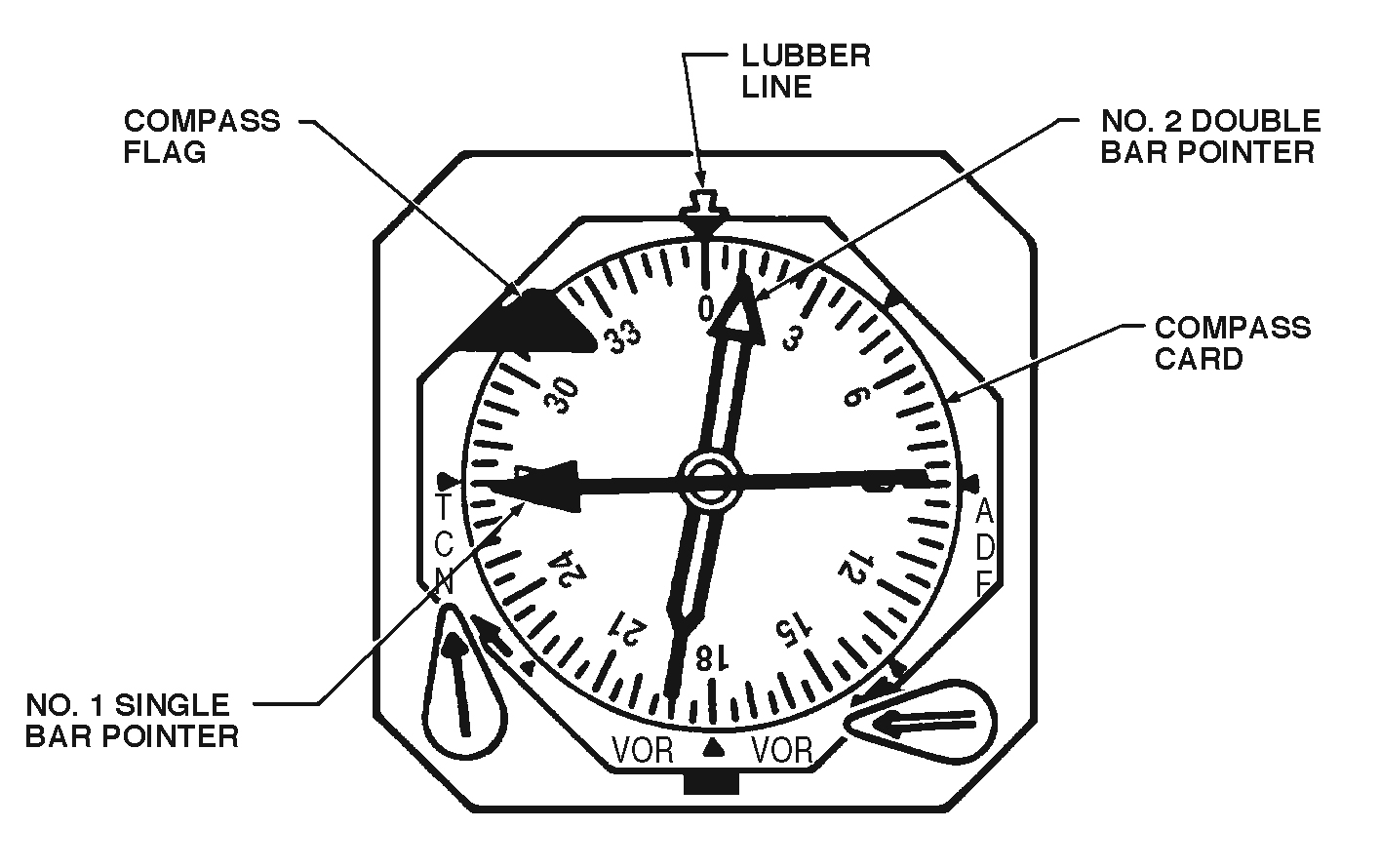

C-20B Radio Magnetic Indicators, 1C-20B-1, Figure 1-191

Dear Kind Reader,

Thank you for the very nice letter.

We have similar issues on just about every airplane I’ve ever flown, including the G450. As the computerization of an airplane goes up, the safeguards tend to get better. But it also becomes harder to tell at a glance when something is amiss.

In the GIII, for example, there is a paddle that switches the RMI from ADF to VOR. I’ve attached a diagram. In the Air Force we called this a “Q-3” paddle because our check rides had three possible results. A Q-1 was a pass. A Q-2 was a pass, but more training was required. And a Q-3 was a fail. You could fly a perfect approach off the wrong source and end up busting a check ride. Of course that was preferable to flying into a mountain.

In the G450 the computers tend to take care of that, and just about every approach is flown off the GPS except those that are localizer-based. We are still at risk of flying the approach to the wrong ILS, but many other things would have to go wrong along the way. Still, the risk is there.

I like your GUMPSSS check. But you are right: it comes too late for checking navigation sources. I’ve found it best to check everything about an approach during the approach briefing, before the approach is begun. Even flying single pilot, I like to speak each step aloud, and I want to do that for the approach briefing too. On most approaches you should be able to set up your navigation sources before the approach is begun. But if you have to switch navigation sources during the approach, there is another opportunity to forget. Have you heard about the “Five T’s” technique at the final approach fix? You may find it helpful to recite them at every FAF.

- TWIST - Twist the heading bug.

- TURN - Turn to the new course.

- TUNE - Tune the new frequency or navaid (and ensure the right buttons are pressed).

- TALK - Talk on the radio to report inbound.

- TIME - Start timing.

Of course, not all these steps are required for every approach, but it can be useful to recite them all when flying a non-GPS approach. (See Pointing and Calling, also called "Shisa Kanko," for these kinds of techniques.) A GIII crash a few years ago illustrates the point: Case Study: Gulfstream GIII N85VT.

The crew flew an ILS off a localizer and mistook the fast/slow indicator for the glide slope. They crashed, killing everyone on board. The accident could have been prevented had they simply set up the navigation aids before they started the approach.

I hope these techniques prove useful. If you don’t mind, I would like to reprint your letter on the website (with your name and all other identification removed) to help others with the same question.

James

References

(Source material)

Accident Docket, Gulfstream GIV N121JM, NTSB ERA14MA271

Accident Docket, Lear 35 N452DA, NTSB CEN17MA183

Aviation Occurrence Report, 4 February 2015, TransAsia Airways Flight GE235, Loss of Control and Crashed into Keelung River, June 2016

Petroski, Henry, To Engineer is Human: The Role of Failure in Successful Design, Vintage Books, NY, 1992.

Spark, Nick T., A History of Murphy's Law, www.historyofmurphyslaw.com, 2006

Technical Order 1C-20B-1, C-20B Flight Manual, USAF Series, 1 November 2002

Transportation Safety Board of Canada, Aviation Investigation Report A07A0134, Touchdown Short of Runway, Jetport Inc., Bombardier BD-700-1A11 (Global 5000) C-GXPR, Fox Harbour Aerodrome, Nova Scotia, 11 November 2007