I am not a fan of most products of academia designed to improve on what we come up with in aviation to improve safety, but there are exceptions. Threat and Error Management can be really useful, but not in the way it appears in most textbooks.

— James Albright

Updated: 2019-10-06

This is my attempt to fix what is broken and make it really valuable for Crew Resource Management.

1

Latest generation of CRM: Threat and Error Management (TEM)

During one of my jobs as a check airman for a 14 CFR 135 management company, I tended to get the problem crews for evaluation. My instructions were to evaluate, train if possible, and recommend dismissal otherwise. So I got to see more of the worst than the best. I knew intuitively that the best crews plan for the worst-case scenario, even when the worst case almost never happens. But I soon got to see crews who always hoped for the best and ignored any thoughts of what could go wrong. I came to realize these pilots didn't believe there were threats out there trying to kill them. They lacked threat awareness.

Since I had long ago started tuning out the alphabet soup that the academics were preaching in the latest generations of CRM, I missed out on early discussion about "Threat and Error Management." But, as it turns out, TEM was singing my song.

The sixth generation of CRM

- At the start of the 21st century, the sixth generation of CRM was formed, which introduced the Threat and Error Management (TEM) framework as a formalized approach for identifying sources of threats and preventing them from impacting safety at the earliest possible time.

- Threats can be any condition that makes a task more complicated, such as rain during ramp operations or fatigue during overnight maintenance. They can be external or internal. External threats are outside the aviation professional's control and could include weather, a late gate change, or not having the correct tool for a job. Internal threats are something that is within the worker's control, such as stress, time pressure, or loss of situational awareness.

- Errors come in the form of noncompliance, procedural, communication, proficiency, or operational decisions.

- To assess the Threat and Error Management aspects of a situation, aviation professionals should:

  - Identify threats, errors, and error outcomes.

  - Identify "Resolve and Resist" strategies and counter measures already in place.

  - Recognize human factors aspects that affect behavior choices and decision making.

  - Recommend solutions for changes that lead to a higher level of safety awareness.

Source: Cortés, Cusick, pp. 131-132

Now the academic speak might be clouding the good ideas here, so let's look at TEM in these steps, but translated into pilot speak:

Identify threats

- CRM skills provide a primary line of defense against the threats to safety that abound in the aviation system and against human error and its consequences. Today’s CRM training is based on accurate data about the strengths and weaknesses of an organization. Building on detailed knowledge of current safety issues, organizations can take appropriate proactive or remedial actions, which include topics in CRM. There are five critical sources of data, each of which illuminates a different aspect of flight operations. They are:

  - Formal evaluations of performance in training and on the line;

  - Incident reports;

  - Surveys of flightcrew perceptions of safety and human factors;

  - Flight Operations Quality Assurance (FOQA) programs using flight data recorders to provide information on parameters of flight. (It should be noted that FOQA data provide a reliable indication of what happens but not why things happen.); and

  - Line Operations Safety Audits (LOSA).

As operators, we can add an item to this list with one more source of data: personal experience. In order to do that, however, we need to be honest with ourselves and our observations.

Source: Helmreich

Operationally, flightcrew error is defined as crew action or inaction that leads to deviation from crew or organizational intentions or expectations. Our definition classifies five types of error:

- Intentional noncompliance errors are conscious violations of SOPs or regulations.

- Procedural errors include slips, lapses, or mistakes in the execution of regulations or procedure. The intention is correct but the execution flawed;

- Communication errors occur when information is incorrectly transmitted or interpreted within the cockpit crew or between the cockpit crew and external sources such as ATC;

- Proficiency errors indicate a lack of knowledge or stick and rudder skill; and

- Operational decision errors are discretionary decisions not covered by regulation and procedure that unnecessarily increases risk.

Source: Helmreich

You can identify threats well before a flight using the many reports generated after previous flights and training events. But some threats do not show up explicitly in these reports, and it will be up to you to spot traits that lead to procedural intentional non-compliance, a lack of proficiency, or poorly designed Standard Operating Procedures. You will also have to be on the lookout for errors made in real time during operations. In short, you need to pay attention if you hope to identify threats.

Identify procedures already in place

Three responses to crew error are identified:

- Trap – the error is detected and managed before it becomes consequential;

- Exacerbate – the error is detected but the crew’s action or inaction leads to a negative outcome;

- Fail to respond – the crew fails to react to the error either because it is undetected or ignored.

Source: Helmreich

You should already have in place technological, procedural, and technique-based answers to all known threats. TCAS and EGPWS, for example, are technological solutions. I often get questions about something an airplane is doing (or failing to do) that is answered in the AFM or AOM; don't ignore the obvious sources of solutions. Finally, there may be a great technique out there that someone else is using that you've never heard of. You should search the many online sources as well as user groups. If you have a great technique that can prevent an accident, you should document it and take steps to spread the word.

Anticipate problems

- Resilience is the ability to recognize, absorb and adapt to disruptions that fall outside a system’s design base, where the design base incorporates all the soft and hard bits that went into putting the system together (e.g. equipment, people, training, procedures). Resilience is about enhancing people’s adaptive capacity so that they can counter unanticipated threats.

- Safety is not about what a system has, but about what a system does: it emerges from activities directed at recognizing and resisting, or adapting to, harmful influences. It requires crews to both recognize the emerging shortfall in their system’s existing expertise, and to develop subsequent strategies to deal with the problem.

- As for crewmembers, they should not be afraid to make mistakes. Rather, they should be afraid of not learning from the ones that they do make. Self-criticism (as expressed in e.g. debriefings) is strongly encouraged and expected of crew members in the learning role. Everybody can make mistakes, and they can generally be managed. Denial or defensive posturing instead squelches such learning, and in a sense allows the trainee to delegitimize mistake by turning it into something shameful that should be repudiated, or into something irrelevant that should be ignored. Denying that a technical failure has occurred is not only inconsistent with the idea that they are the inevitable by-product of training.

Source: Dekker

Resilient crews:

- . . . are able to take small losses in order to invest in larger margins (e.g. exiting the take-off queue to go for another dose of de-icing fluid).

- . . . realize that just because a past chain of events came out okay doesn't mean it will the next time.

- . . . keep a discussion about risk alive even when everything looks safe.

- . . . study accident reports of other aircraft, even if a dissimilar aircraft type or operation, realizing the lessons learned can be applicable.

- . . . realize information must be shared because each individual may only have a fraction of the total information needed.

- . . . are open to generating and accepting fresh perspectives on a problem.

Come up with a Plan B

We cannot prepare our crews for every possible situation.

Source: Dekker

Rather, when we train new crewmembers, we need to get confidence that they will be able to meet the problems that may come their way—even if we do not yet know exactly what those will be.

Most of our training puts a focus on technical skills.

Source: Dekker

These aim to build up an inventory of techniques for the operation of an aircraft, or—nowadays—a set of competencies to that end.

There will always be something that we have not prepared crews for.

Source: Dekker

This is because formal mechanisms of safety regulation and auditing (through e.g. design requirements, procedures, instructions, policies, training programs, line checks) will always somehow, somewhere fall short in foreseeing and meeting the shifting demands posed by a world of limited resources, uncertainty and multiple conflicting goals.

At some point, crews will have to improvise "outside the margins."

Source: Dekker

It is at these edges that the skills bred for meeting standard threats need transpositioning to counter threats not foreseen by anybody. The flight of United Airlines 232 is an extreme example. The DC-10 lost total hydraulic power as a result of a tail engine rupture, with debris ripping through all hydraulic lines that ran through the nearby tailplane in mid-flight. The crew figured out how to use differential power on the two remaining engines (slung under the wings, below the aircraft’s center of gravity) and steered the craft toward an attempted landing at Sioux City, Iowa, which a large number of passengers (and the crew) subsequently survived.

2

Threat and Error Management (TEM) reconstructed

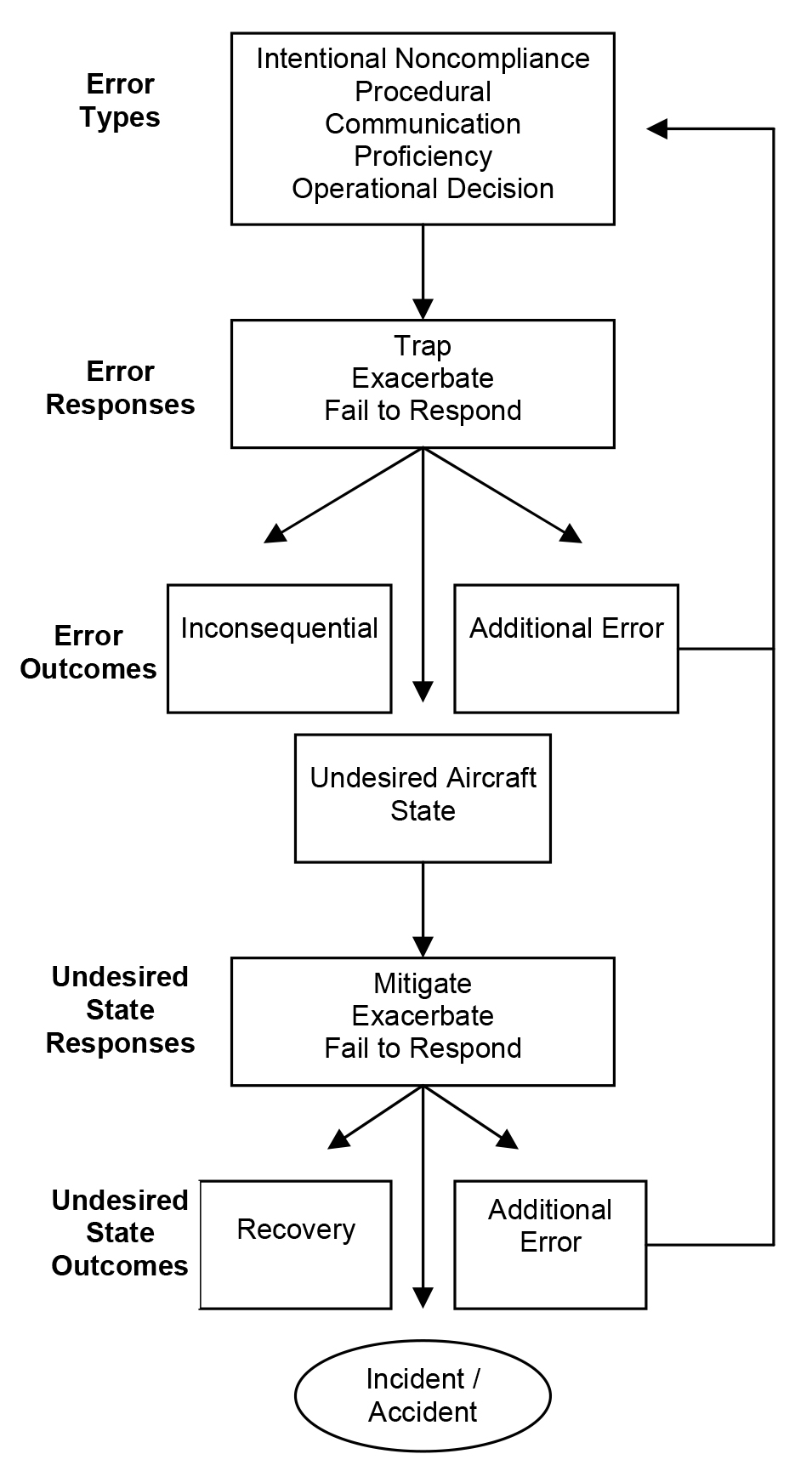

The Threat and Error Management (TEM) Model, as constructed by researchers at the University of Texas at Austin, is quite useful, but suffers in its complexity and language. Let's take a look at it and see what we can come up with that is more useful for pilots.

Error management model, from Helmreich, figure 2.

Error types

- Intentional non-compliance errors should signal the need for action since no organization can function safely with widespread disregard for its rules and procedures. One implication of violations is a culture of complacency and disregard for rules, which calls for strong leadership and positive role models. Another possibility is that procedures themselves are poorly designed and inappropriate, which signals the need for review and revision. More likely, both conditions prevail and require multiple solutions. One carrier participating in LOSA has addressed both with considerable success.

- Procedural errors may reflect inadequately designed SOPs or the failure to employ basic CRM behaviors such as monitoring and cross checking as countermeasures against error. The data themselves can help make the case for the value of CRM. Similarly, many communications errors can be traced to inadequate practice of CRM, for example in failing to share mental models or to verify information exchanged.

- Proficiency errors can indicate the need for more extensive training before pilots are released to the line. LOSA thus provides another checkpoint for the training department in calibrating its programs by showing issues that may not have generalized from the training setting to the line.

- Operational decision errors also signal inadequate CRM as crews may have failed to exchange and evaluate perceptions of threat in the operating environment. They may also be a result of the failure to revisit and review decisions made.

Source: Helmreich

Error Responses and outcomes

- The response by the crew to recognized external threat or error might be an error, leading to a cycle of error detection and response. In addition, crews themselves may err in the absence of any external precipitating factor. Again CRM behaviors stand as the last line of defense. If the defenses are successful, error is managed and there is recovery to a safe flight. If the defenses are breached, they may result in additional error or an accident or incident.

Source: Helmreich

Undesired State Responses

Undesired states can be:

- mitigated,

- exacerbated, or

- met with a failure to respond.

Source: Helmreich

Undesired State Outcomes

There are three possible resolutions of the undesired aircraft state:

- Recovery is an outcome that indicates the risk has been eliminated;

- Additional error - the actions initiate a new cycle of error and management; and

- crew-based incident or accident.

Source: Helmreich
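The categories quoted above are easier to keep straight when written down as a small data model. What follows is a minimal Python sketch that assumes nothing beyond the error types, error responses, undesired state responses, and outcomes listed in the Helmreich excerpts. The class and function names (`ErrorType`, `ErrorResponse`, `StateResponse`, `StateOutcome`, `ErrorEvent`, `tally_by_type`) are illustrative inventions and not part of any published LOSA tooling.

```python
from collections import Counter
from dataclasses import dataclass
from enum import Enum
from typing import Iterable, Optional


class ErrorType(Enum):
    """The five flightcrew error types quoted from Helmreich."""
    INTENTIONAL_NONCOMPLIANCE = "intentional noncompliance"
    PROCEDURAL = "procedural"
    COMMUNICATION = "communication"
    PROFICIENCY = "proficiency"
    OPERATIONAL_DECISION = "operational decision"


class ErrorResponse(Enum):
    """The three crew responses to an error."""
    TRAP = "trap"
    EXACERBATE = "exacerbate"
    FAIL_TO_RESPOND = "fail to respond"


class StateResponse(Enum):
    """Responses to an undesired aircraft state."""
    MITIGATE = "mitigate"
    EXACERBATE = "exacerbate"
    FAIL_TO_RESPOND = "fail to respond"


class StateOutcome(Enum):
    """Possible resolutions of an undesired aircraft state."""
    RECOVERY = "recovery"
    ADDITIONAL_ERROR = "additional error"
    INCIDENT_OR_ACCIDENT = "incident or accident"


@dataclass(frozen=True)
class ErrorEvent:
    """One observed error (hypothetical record layout, for illustration)."""
    description: str
    error_type: ErrorType
    response: ErrorResponse
    # The next two fields apply only if the error led to an undesired state.
    state_response: Optional[StateResponse] = None
    outcome: Optional[StateOutcome] = None

    @property
    def trapped(self) -> bool:
        """True when the error was detected and managed before it mattered."""
        return self.response is ErrorResponse.TRAP


def tally_by_type(events: Iterable[ErrorEvent]) -> Counter:
    """Count events per error type, the kind of summary a debrief might use."""
    return Counter(event.error_type for event in events)
```

A tally like this says what kind of errors keep showing up, which is the question the error-type discussion above is trying to answer; it says nothing about why they happen.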

I am a big fan of the original Cockpit Resource Management concept, and maybe even some of the next iterations of Crew Resource Management. But after the academics put in their $0.02, I started to lose interest. The University of Texas idea changed my view of the academicians, though, and I think their TEM model has promise. I do have a few complaints:

- I'm not sure the differentiation between threats and errors is significant; I think the errors themselves are threats.

- The TEM process, as given, delays mitigation until after an "Undesired Aircraft State" (UAS), when in fact the mitigation can be a step to prevent the UAS in the first place.

- The model seems to ignore the learning process; you can improve future prospects by learning from the current threat or error.

I think we can take this model, refocus, and come up with something really useful . . .

3

Plan B

Successful crews think about the aircraft, the environment, and everything to do with the act of aviation, and try to envision what the threats to safe operations are while coming up with countermeasures to address these threats. These countermeasures become "Plan B" in case the threat occurs. Sometimes these crews have a Plan B, sometimes they have to create one on the fly. However the Plan B comes about, it needs to be evaluated after use and adjusted for the future.

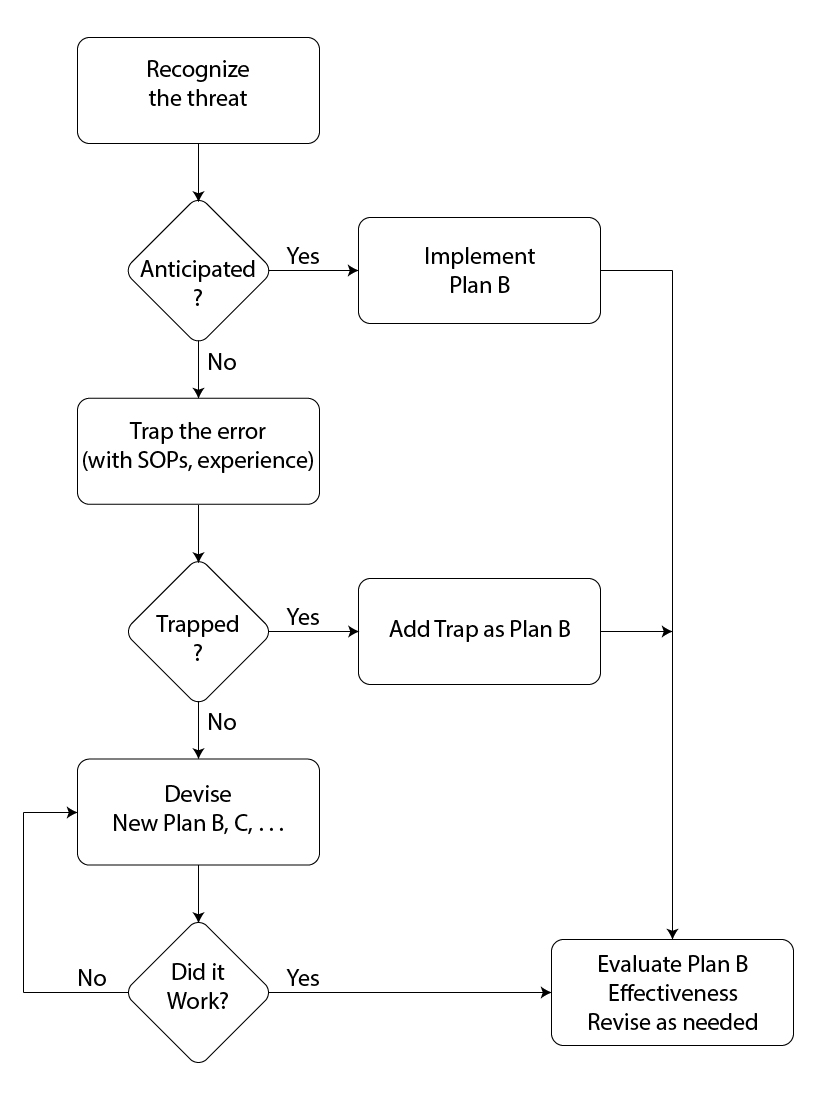

Plan B — the steps

- Recognize the threat — You need to pay attention here, but aircraft warning systems, air traffic control, and Internet applications can all help. You can detect a threat days in advance, e.g., an advancing weather system. You can detect a threat while filing the flight plan, e.g., an EDCT. It can be as straightforward as a warning bell and CAS message. Or it can be as subtle as the other pilot nodding off. But you have to pay attention to recognize the threat for what it is.

- Leverage technology — We sometimes think about the latest technological upgrade as just a luxury item we can do without. But how luxurious is a modification that costs $100,000 that can potentially save a $10,000,000 aircraft? Synthetic vision can help you realize a circling approach cannot be continued. Infrared cameras can illuminate a runway obstruction your eyes cannot see. Predictive windshear detection systems can warn you about a windshear before it happens.

- Monitor the news — We tend to tune out things that aren't in our own spheres. Military? Airline? Corporate? Or perhaps what happens to a single pilot turboprop doesn't matter to an ultra long-range corporate jet. It all does matter; go beyond the headlines to find out.

- Focus your briefs on the threats — I recommend you rethink your canned takeoff briefing if it never changes, no matter the weather or runway length. I would also start over on your canned approach briefing if it covers such banalities as the date of the chart or the MSA in a non-mountainous area. But I wouldn't go so far as the Royal Aeronautical Society "Briefing Better" recommendation. Keep anything that can kill you if forgotten or misunderstood. Here are some examples of effective threat-focused briefings:

  - Teterboro on a fine day: "This will be a flex takeoff on a long, dry runway, but our balanced field is within 2,000 feet of the runway length so any rejected takeoff near V1 will have to be with maximum reverse and braking. The departure procedure in the FMS agrees with the chart, so we will use LNAV for lateral guidance. The first altitude restriction comes immediately after takeoff at 1,500 feet and is a frequent spot for altitude busts, so we will use TOGA and a normal acceleration, but let's keep an eye on that level off altitude after takeoff."

  - Aspen on a hot day: "This will be an obstacle-limited takeoff, using runway analysis performance numbers. We have a high pressure altitude which will increase the true airspeed of all our performance numbers, making a rejected takeoff especially challenging. Our biggest threat comes from the mountains which, after an engine failure at V1, can only be cleared by following the procedure exactly and at V2 plus 10. The Aspen Seven procedure agrees exactly with the obstacle performance procedure, so if we lose an engine, we both need to focus on keeping the lateral needles centered and the speed on target."

  - "Today's approach into Bedford will be just a quarter of a mile above visibility minimums. The temperature/dew point spread is just one degree so we might see the visibility go below minimums. We've already confirmed the FMS is set up for the ILS, including the missed approach. We need to confirm we get a good tune and identify before we begin the approach, and we need to ensure the FMS sequences so we will get an automatic missed approach if needed. The DA is 382 feet, which gives us a 250 foot HAT, due to the hill on final approach. I will be using the EVS, but given the terrain I will not go lower than 250 feet unless I have the HIRLs in sight. The runway is reported wet so I will brake accordingly. If we don't have the runway in sight, I will press TOGA and climb immediately, retract the flaps to 20 degrees, retract the landing gear, and follow the blue needles. The procedure requires we climb to 1,000 feet, turn left on a heading of 350 degrees and climb further to 2,000 feet on our way to the ZIMOT holding pattern. We have enough fuel for two tries, but let's consider going to Boston Logan instead."

- Encourage open communication in the cockpit — If you are new to the crew, ensure everyone understands it is better to speak up when in doubt, and never shut down this kind of input unless it is inappropriate or distracting. If you aren't sure about a real-time critique, let it slide if you can and talk about it later. If the critique was in error, don't harp on it; just remind everyone, "when in doubt, please speak up."

- React — If you've anticipated the situation well, you should have a Plan B on the shelf, ready to go. Otherwise, you will need to "trap the error" so that it doesn't create any more damage than it already has. This trapping process is normally aided by existing standard operating procedures, but sometimes you will be relying on your experiences to pull you through.

  - If time permits, let the crew know what the problem is and what the SOP has to say about it. Encourage input and verify that everyone agrees that the SOP is the way to go.

  - If there is no applicable SOP and time permits, either ask for input or announce what you believe should be the next steps. (Even if you know the right answer, asking for input is a good way to achieve "buy in" from the crew or to help younger crewmembers to develop decision making ability and confidence.)

  - If time is critical, act according to SOP (if applicable) or as you believe is appropriate. Do not shut down crewmembers with better ideas if you can spare the time, but don't sacrifice the right (and safe) answer in an attempt to grow your options.

- Learn — If you had to improvise a solution because you didn't have an existing Plan B, you should remember to reconstruct your actions for future evaluation. Your solution may become the next pilot's procedure. Or you may need to adjust here and there.

- Keep trying — If your ad hoc solution didn't work, you need to adjust and try again until the solution works or you run out of time.

- Evaluate — When all is said and done, and you have a quiet moment, you need to gather the crew together to evaluate what happened and how well the Plan B worked (the one you had or the one you ended up with). Make sure the new Plan B is an improvement, and let everyone know. Your experience can save another crew.

  - Finish every flight with a complete debrief, even if it was a "textbook" flight.

  - I've found the best way to begin a debrief is with the U.S. Navy Blue Angels briefing starter, "I'm happy to be here." That is their acknowledgment that despite anything that is to follow, it is a privilege to be flying professionally.

  - Then, if you are the captain, begin with the high points of the flight in general but then cover the things you did that could have gone better.

  - Unless you have a formal instructor role, leave it at that and allow others to critique themselves. If you have an instructor role to play, you can see if the student covers what needs to be covered and you can steer the conversation as needed.

Example from one of my recent flights: "It was a good flight to Teterboro and I am happy to be here. It was a busy arrival and approach control threw us a few curve balls and I could have been more precise on the descent. They gave us a short descent and the FMS angle looked good. But it wasn't, of course, because ATC decreased the distance we had to go. I should have calculated the distance manually as soon as we got the clearance, but I didn't. As it was, I realized my mistake and the speed brakes saved me in the end, but it wasn't my finest effort." At that point the other pilot asked about techniques on estimating the descent angle and we had a good discussion. We'll both do better next time.
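The manual distance check mentioned in that debrief comes down to simple trigonometry. Here is a minimal Python sketch, assuming only that you know the altitude to lose and the distance remaining. The function names and the sample numbers are hypothetical and do not come from the flight described. The mental-math version uses the common 60-to-1 approximation (one degree is roughly 100 feet per nautical mile), which is one way to estimate the angle and not necessarily the technique discussed in that debrief.

```python
import math

FEET_PER_NM = 6076.12  # feet in one nautical mile (1,852 meters)


def required_descent_angle_deg(altitude_to_lose_ft: float, distance_nm: float) -> float:
    """Exact descent angle, in degrees, needed to lose the altitude in the distance."""
    if distance_nm <= 0:
        raise ValueError("distance_nm must be positive")
    gradient = altitude_to_lose_ft / (distance_nm * FEET_PER_NM)
    return math.degrees(math.atan(gradient))


def rule_of_thumb_angle_deg(altitude_to_lose_ft: float, distance_nm: float) -> float:
    """60-to-1 approximation: hundreds of feet to lose divided by miles to go."""
    if distance_nm <= 0:
        raise ValueError("distance_nm must be positive")
    return (altitude_to_lose_ft / 100.0) / distance_nm


if __name__ == "__main__":
    # Hypothetical clearance: 10,000 feet to lose, first with 30 nm to go,
    # then with ATC shortening the distance to 20 nm.
    for miles in (30, 20):
        exact = required_descent_angle_deg(10_000, miles)
        quick = rule_of_thumb_angle_deg(10_000, miles)
        print(f"{miles} nm: exact {exact:.1f} deg, rule of thumb {quick:.1f} deg")
```

The point of the comparison is that shortening the distance steepens the required angle noticeably, which is exactly the kind of change that is easy to miss if you trust an FMS angle computed for the original routing.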

An Example

There is a "gotcha" in the Gulfstream G450 avionics that can end catastrophically. Looking at how we came up with a solution shows the iterative Plan B process in action.

- Aircraft delivery — The G450's avionics represented an iterative change from previous Gulfstreams in that much of it was familiar, but the interface had big changes. Those of us from the GV and earlier were used to an autopilot that would track an altitude to level off in a climb or descent, no matter how many times you changed the target altitude.

  - We did not anticipate the problem and our first event was a gradual climb through an assigned altitude without the altitude alert chime going off.

  - Our existing plan to avoid altitude busts was to insist on a sterile cockpit when within 1,000 feet of an assigned level off altitude. But we allowed both pilots to divert their attention for "official" duties, such as checking an oceanic clearance.

  - We learned that our sterile cockpit rules needed to trap all events and adjusted our procedures to require the pilot flying to focus only on flying the aircraft within 1,000 feet of a level off.

  - We also realized our understanding of the airplane was deficient and started canvassing other G450 operators. (Sadly, others had experienced similar issues but were just as clueless about the cause.)

- Second event — Because we had instituted the "focus only" rule change, the next time the problem happened the pilot flying caught the autopilot error in the act. As it turns out, once the autopilot begins capturing the altitude shown in the flight director's Altitude Select (ASEL) window, it enters a "pitch hold" mode until that altitude is captured. If a new ASEL is entered before the previous altitude is captured, the autopilot maintains the pitch until the aircraft stalls (in a climb), overspeeds or hits the ground (in a descent), or until the pilot selects a new vertical mode.

  - During this event, our Plan B did capture the threat in the act.

  - Our Plan B was simply to catch the problem and fix it, which we did.

  - We learned what the problem was and adjusted our Plan B to require the pilot flying to confirm an autopilot vertical mode is selected whenever acknowledging a new target altitude.

  - We did not find anything in any existing literature about the problem and confirmed with Gulfstream that the problem exists.

- Current status — Our current Plan B gets activated about once a month and it always works. I have written about the problem in Business & Commercial Aviation Magazine (Pilot Error: A How-to-Guide). Gulfstream has fixed the problem on later aircraft, but we G450 operators need to beware.
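The mode trap and the final Plan B can be illustrated with a toy state model. This is a deliberately simplified Python sketch built only from the behavior described in this example; it is not Gulfstream's logic, and the mode names (`VERTICAL_MODE`, `CAPTURE`, `PITCH_HOLD`), the `ToyAutopilot` class, and the `acknowledge_new_altitude` helper are all hypothetical.

```python
from dataclasses import dataclass

# Hypothetical mode names for illustration only; not actual avionics terminology.
# In these modes the toy autopilot will still level off at the ASEL altitude.
TRACKING_MODES = {"VERTICAL_MODE", "CAPTURE"}


@dataclass
class ToyAutopilot:
    asel_ft: int
    mode: str = "VERTICAL_MODE"

    def begin_capture(self) -> None:
        """The autopilot starts leveling off toward the current ASEL altitude."""
        self.mode = "CAPTURE"

    def set_asel(self, new_asel_ft: int) -> None:
        """Dial in a new target altitude.

        The gotcha: if this happens mid-capture, the toy autopilot is left
        holding pitch with no altitude it intends to level off at.
        """
        if self.mode == "CAPTURE":
            self.mode = "PITCH_HOLD"
        self.asel_ft = new_asel_ft

    def select_vertical_mode(self) -> None:
        """Pilot action that restores tracking toward the ASEL altitude."""
        self.mode = "VERTICAL_MODE"

    def will_level_off(self) -> bool:
        return self.mode in TRACKING_MODES


def acknowledge_new_altitude(autopilot: ToyAutopilot, new_asel_ft: int) -> bool:
    """Plan B: set the altitude, then confirm a vertical mode is selected.

    Returns True if the pilot had to reselect a vertical mode.
    """
    autopilot.set_asel(new_asel_ft)
    if autopilot.will_level_off():
        return False
    autopilot.select_vertical_mode()
    return True
```

The design choice worth noticing is that the check lives in the acknowledgment step itself. The pilot flying does not have to remember whether the autopilot was mid-capture; confirming the vertical mode every time a new altitude is acknowledged covers both cases.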

References

(Source material)

Cortés, Antonio; Cusick, Stephen; Rodrigues, Clarence, Commercial Aviation Safety, McGraw Hill Education, New York, NY, 2017.

Cortés, Antonio, CRM Leadership & Followership 2.0, ERAU Department of Aeronautical Science, 2008.

Dekker, Sidney and Lundström, Johan, From Threat and Error Management (TEM) to Resilience, Journal of Human Factors and Aerospace Safety, May 2007.

Helmreich, Robert L., Klinect, James R., Wilhelm, John A., Models of Threat, Error, and CRM in Flight Operations, University of Texas.