There were a few times in my Air Force career that I felt like I was the subject of a science experiment. The worst of these was when we pilots, navigators, and other crew members were required to sit in a tent and were exposed to a chemical gas. “They” say it was to train us to remain calm under attack, but I suspect the laboratory scientists were collecting data on how far they could push us, their compliant subjects. I started calling myself an Air Force lab rat. Laboratory rats, otherwise known as Rattus norvegicus domestica, are bred and kept specifically for scientific research. Rats are cheap and the knowledge gained justifies the inevitable losses. Ever since my lab rat days, I’ve been on the lookout for science experiments that don’t have my best interests at heart.

— James Albright

Updated: 2023-01-03

You might argue that being a lab rat is part of the price we in uniform had to pay, and I think there is truth in that. But I’ve never signed up for lab rat status as a civilian pilot, and yet I get that feeling now and then. Over the years I’ve come up with a few defense mechanisms and witnessed a few others from my fellow test subjects. I don’t think there is any ill intent from those conducting the tests; it is more about them trying to do their jobs without thinking about the impact on us doing ours. A few examples are in order.

1

The ATC lab rat

A friend of mine at American Airlines was on final to Chicago Midway’s Runway 31C at about 400 feet with clearance to land. There was a long line of aircraft on the ground waiting to take off on that, the longest runway in use. Tower instructed my friend to sidestep to Runway 31R, well inside their stable approach criteria. “We can’t do that,” they responded. “Go around.” So, they went around.

I wonder how many times that tower controller directed aircraft to do the low altitude sidestep and how many times the aircraft complied. One can imagine the scientist behind the microphone keeping a mental tally of the number of lab rats he or she was able to manipulate into the unsafe sidestep. Our American Airlines pilot performed perfectly, keeping his aircraft and passengers safe while refusing to clean up the controller’s mess.

2

The chief pilot's lab rat

Before I showed up at my first civilian flight department, they routinely flew from Houston, Texas to London, United Kingdom with a basic flight crew of two pilots and a flight attendant. The trip in our Challenger 604s required two legs and about 13 hours of crew duty time if everything went to plan. Then one day the company’s chief executive officer decided to go to Munich, Germany instead, causing the duty day to lengthen to 14 hours, right at our limit. That became the new routine. I flew this trip for my introductory flight with the flight department and was exhausted by the end of the day. I was 43 years old at the time and thought the ravages of old age had finally hit me. But that was nothing like the return, which clocked in at nearly 17 hours of duty time.

I told the chief pilot, my new boss, that I was unable to fly 17-hour days and would refuse that trip in the future. He told me that mine was the first complaint and that I would either have to adapt or find another job. I told him I would start the job hunt immediately. My name was taken off the schedule and the other pilots started asking questions. Seven of the eight remaining pilots marched into the chief pilot's office and said that they too would never again fly a 17-hour day with a basic crew. (The lab rats had mutinied.) The chief pilot had no choice but to start scheduling a crew swap in Boston to make the trip work. He made sure I was on the first trip where this happened so I could explain the reason for the crew swap to the company CEO. I did just that and the CEO responded with a smile, “That’s good. I want you guys to be safe.”

3

The manufacturer's lab rat

One of the trite sayings that is universally accepted by the unsuspecting and understood to be false by those who think about these things is “safety first.” If safety were truly first in our profession, we would never take to the skies. That you cannot build an aircraft with zero risk makes complete sense, but would you knowingly fly an airplane where safety was compromised to increase the manufacturer’s profit? Take, for example, the need to keep the aircraft from stalling by providing the pilot a better idea about the wing’s Angle of Attack (AOA).

An aircraft’s AOA is the angle between the chord line of the wing and the relative wind. The chord line is simply the line between the leading and trailing edges of the wing, and the relative wind is the motion of the airmass relative to the aircraft. The pilot can’t really see either of these but should have a pretty good idea based on the aircraft’s behavior. Some aircraft will have an AOA indicator which, in my opinion, is the single most important instrument on any aircraft. Other aircraft, incredibly, will not have any such indicator but are likely to have a means of measuring AOA to trigger stall warning devices. These devices may do nothing more than alert the pilot of an impending stall by shaking the stick or yoke, may give the pilot a hint of what needs to happen by nudging the stick or yoke, or may even activate the flight controls if the pilot fails to. These are often called “stall barriers” because they are aimed at preventing the stall. Not having an AOA indicator or any of the other devices saves money, of course.
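For a rough feel of the geometry, AOA in wings-level flight through still air is approximately the pitch attitude minus the flight path angle. The sketch below works that out from pitch, vertical speed, and true airspeed. It is a simplification, not how any certified system computes AOA: it ignores the wing's incidence relative to the fuselage, bank, and gusts, and the function name and inputs are made up for illustration.

```python
import math


def approximate_aoa_deg(pitch_deg: float,
                        vertical_speed_fpm: float,
                        true_airspeed_kt: float) -> float:
    """Approximate AOA as pitch attitude minus flight path angle.

    Assumes wings-level flight in still air and ignores wing incidence,
    so treat the result as a rough estimate only.
    """
    # Convert true airspeed from knots to feet per minute
    # (1 nautical mile is about 6076.12 feet).
    true_airspeed_fpm = true_airspeed_kt * 6076.12 / 60.0
    # Flight path angle: positive when climbing, negative when descending.
    flight_path_deg = math.degrees(
        math.asin(vertical_speed_fpm / true_airspeed_fpm)
    )
    return pitch_deg - flight_path_deg


if __name__ == "__main__":
    # Nose 2 degrees up while descending on roughly a 3-degree path.
    print(round(approximate_aoa_deg(2.0, -742.0, 140.0), 1))
```

On a 3-degree descent at 140 knots (about 742 feet per minute down) with the nose 2 degrees above the horizon, the function returns an AOA of about 5 degrees. The nose is pointed well above where the aircraft is actually going, which is exactly what the pilot cannot see directly.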

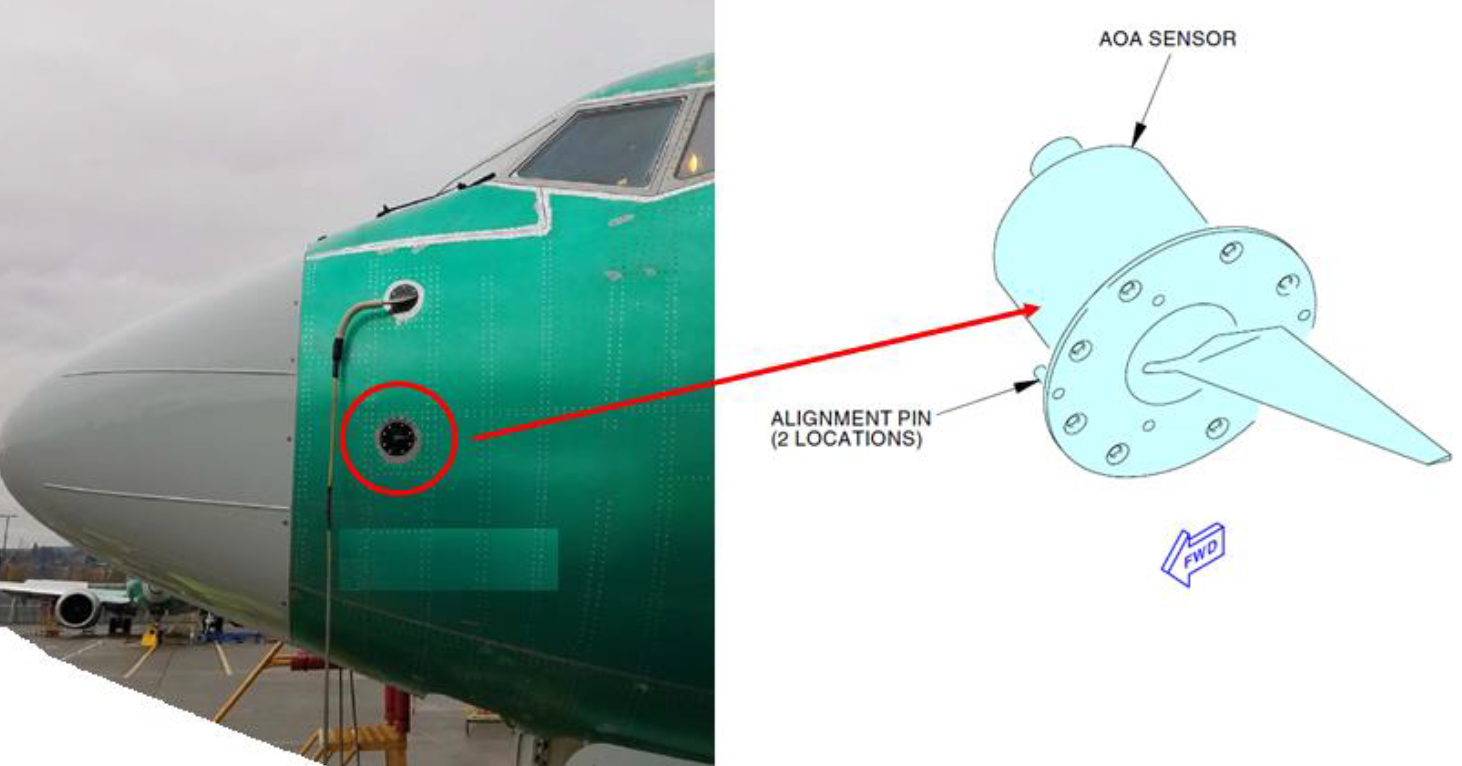

The simplest way to measure AOA is to stick a calibrated vane into the relative wind and measure the angle. These devices can get stuck at an extreme angle, can become jammed if hit by a bird, or can simply fail mechanically. This can be problematic if it causes the pilot or any of the automatic systems to think there is a stall when there isn’t or to believe there isn’t a stall when there is. The solution is to add redundant AOA measuring devices and stall barriers, which of course adds cost.

Any aircraft that allows its flight control system to overrule a pilot’s decision or to take control from the pilot under certain circumstances should obviously have a high level of redundancy to prevent the aircraft from making a wrong decision. In my aircraft, a Gulfstream GVII, for example, the fly-by-wire system will reduce the AOA on its own should I pull back on the stick too far. Instead of one mechanical AOA probe we have four pressure probes which measure pressure above, below, and to the side of each probe. This is the most redundant AOA system I’ve ever flown and may someday become the standard. For now, however, the standard appears to be two mechanical AOA vanes.

Thirty years ago, I was flying a Gulfstream III with two mechanical AOA vanes and two stall barrier systems. The problem is that if one system says the aircraft is in a stall and the other disagrees, what should it do? The answer was to do nothing, tell the pilot about the argument, and allow the pilot to decide. That idea, “let the pilot sort it out,” isn’t universal.
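The "do nothing, tell the pilot" idea can be sketched in a few lines. This is only an illustration of the logic described above, not the Gulfstream III's actual implementation: the threshold values, names, and the rule that both channels must agree before the barrier acts are all assumptions made for the example.

```python
from dataclasses import dataclass

# Hypothetical values chosen for illustration only.
DISAGREE_THRESHOLD_DEG = 5.0   # how far apart the two vanes may read
STALL_BARRIER_AOA_DEG = 14.0   # AOA at which the barrier would act


@dataclass
class StallBarrierDecision:
    activate: bool   # should the stall barrier act on its own?
    disagree: bool   # should the crew be told the sensors disagree?


def evaluate_stall_barrier(left_aoa_deg: float,
                           right_aoa_deg: float) -> StallBarrierDecision:
    """Cross-check two AOA readings before letting automation act."""
    if abs(left_aoa_deg - right_aoa_deg) > DISAGREE_THRESHOLD_DEG:
        # The sensors are arguing: do nothing automatically and
        # leave the decision with the pilot.
        return StallBarrierDecision(activate=False, disagree=True)
    # The sensors agree: act only if both report a high AOA.
    both_high = (left_aoa_deg >= STALL_BARRIER_AOA_DEG
                 and right_aoa_deg >= STALL_BARRIER_AOA_DEG)
    return StallBarrierDecision(activate=both_high, disagree=False)


if __name__ == "__main__":
    # One vane reads a normal angle, the other an extreme one.
    print(evaluate_stall_barrier(4.0, 22.0))
```

With one vane reading 4 degrees and the other 22, the sketch declines to activate and flags the disagreement. A design that trusts a single sensor would instead act on whichever reading it happened to be using.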

Angle of Attack (AOA) sensor, KNKT.18.10.35.04, figure 7

In October 2018 a Lion Air Boeing 737 MAX 8 crashed, killing all 189 on board and leading much of the aviation world to suspect pilot error or, more charitably, poor pilot training. Five months later, it happened again to the same aircraft type flown by Ethiopian Airlines, this time killing all 157 on board. Examining the cockpit voice and flight data recorders made it clear that the aircraft was forcing the nose down and the pilots were trying and failing to prevent that. The aircraft was grounded by the U.S. Federal Aviation Administration (FAA) for about 20 months and the acronym MCAS became infamous in the aviation world.

Boeing’s current website tells us that the Maneuvering Characteristics Augmentation System (MCAS) was designed and certified for the 737 MAX “to enhance the pitch stability of the airplane” so that “it feels and flies like other 737s.” MCAS is designed, the page tells us, “to activate in manual flight, with the airplane’s flaps up, at an elevated Angle of Attack (AOA).” Most telling: “Boeing has developed an MCAS software update to provide additional layers of protection if the AOA sensors provide erroneous data.” Among the additional layers: “Flight control system will now compare inputs from both AOA sensors.”

If you are wondering what kind of money savings were made by installing two AOA sensors but only using one at a time, you are not alone. Boeing offered an AOA indicator for the Primary Flight Display (PFD) which would also provide an “AOA DISAGREE” message if the two sensors disagreed. In my opinion, since the added AOA indicator was implemented in software and didn’t involve added hardware, making it an option and not standard equipment was a way to generate increased revenue. The very existence of MCAS was an attempt to avoid having to go through certification for a new aircraft type and to entice airlines to use the MAX with already typed pilots. Pilot training for MCAS differences was nothing more than a few paragraphs in the manual and discussions in class. An errant MCAS activation, it could be argued, was so much like a runaway pitch trim (what happens when the stabilizer trims the aircraft’s pitch nose down on its own, something that could happen on the earliest 737s) that runaway pitch trim training would suffice.

Because Lion Air didn’t pay for the AOA indicator, its pilots would not have received the AOA DISAGREE warning when an open circuit caused one of their AOA sensors to fail and the MCAS to activate. The pilots made a few mistakes and should have been able to deal with the MCAS problem using runaway pitch trim procedures. But the airline is said to have taken shortcuts in training too. Writer William Langewiesche was told, “In Indonesian simulators, there are sometimes seven in there: two pilots flying, one instructing and four others standing up and logging time.”

As if the story behind MCAS isn’t tangled enough, one has to wonder how the changes to the 737 type got certified in the first place. Under rules present at the time of the 737 MAX’s certification, a program called Organization Designation Authorization allowed the FAA to supplement its staff with employees provided by the manufacturer. The FAA saved the funds required to train and maintain the needed expertise to properly oversee Boeing.

It appears that the initial cadre of Boeing 737 MAX crews were lab rats being studied by Boeing. “I wonder how far back we can pare safety systems and training?” The Indonesian pilots were lab rats being studied by their airline. “Let’s see how many shortcuts in training we can take.” The crews and the public at large were lab rats under the supervision of the FAA. “If we allow the fox to supervise the hen house, we can get our jobs done with less cost and effort.”

More about this: Lion Air 610.

4

Lab rat survival techniques

My friend who refused the Midway Airport sidestep admits that he once accepted the sidestep and that taught him that going around was preferable to what happened when he had to salvage the unstable approach. I only found the backbone to refuse the illegal duty day scenario after sheepishly accepting the schedule without complaint.

In all these situations, the best way to understand you are being asked to become a lab rat is to know the history of the many laboratory specimens who have preceded you. What can happen following a last-minute sidestep? How has crew fatigue turned a long duty day into an even longer accident investigation? What are the weaknesses of any brand-new aircraft systems in your aircraft?

The solution for these lab rat experiments is to let the people in the lab coats know that their test results are available without the risk and that the high cost of failure makes the experiment foolhardy. For example, you don’t have to weigh the pros and cons of pushing your pilots beyond duty limits if you understand alternatives are available and the extra costs are insignificant compared to losing the aircraft and all on board. As pilots, the best defense is a two-part strategy: (1) understand accident histories of similar aircraft and operations, and (2) understand your aircraft procedures thoroughly. Step 1 will help you to avoid becoming a lab rat. Step 2 can save you when step 1 fails.

References

(Source material)

Aircraft Accident Investigation Report, PT. Lion Mentari Airlines, Boeing 737-8 (MAX); PK-LQP, Tanjung Karawang, West Java, Republic of Indonesia, 29 October 2018, Komite Nasional Keselamatan Transportasi (KNKT.18.10.35.04), Republic of Indonesia.