I was introduced to my first flight director in 1979 and thought it was a cosmic computer that could think faster than me and was a sign of things to come. I later learned it didn’t think at all but it did herald changes in the near future. That early flight director was in the Northrop T-38 Talon and it wasn’t really a computer at all, since it simply took analog signals and turned those into the movement of mechanical needles. It did some cosmic things, but it wasn’t a computer.

— James Albright

Updated: 2021-06-26

A real computer deals with data in the digital world. I never really had a computer in the cockpit until I was introduced to my first Inertial Navigation System (INS). That’s when I learned to appreciate the advantages of the digital age to come, but also some of the pitfalls. We learned early on about the “garbage in, garbage out” phenomenon of computer data.

In 1989 I was flying an Air Force Boeing 747 between Anchorage, Alaska and Tokyo, Japan using what is now called North Pacific (NOPAC) R220, the northernmost route. I was especially concerned with navigation since the Soviet Union didn’t want any U.S. Air Force airplanes so close to its facilities in Petropavlovsk on the Kamchatka Peninsula, and we would be flying within about 100 nautical miles of them. This was just six years after the Soviets shot down another Boeing 747 flying in the same area, the infamous Korean Air Lines 007 incident. Perhaps “concerned” is an overstatement. I had on board three Delco Carousel INSs, commonly called the “Carousel IV,” as well as two navigators. The Carousel was standard equipment back then for many Air Force transport aircraft, as well as civilian Boeing 747s. So, what could go wrong? The Carousel IV’s biggest limitation was that it could only hold 9 waypoints, and each had to be entered as latitude and longitude using a numeric keypad. Our navigators would load all 9 waypoints, and once a waypoint was two behind us, they would program the next one to take its place.

Waypoint entry seemed simple at the time. You selected “Way Pt” from a dial, moved a thumbwheel to the desired point, hit the number key for the cardinal direction (2=N, 6=E, 8=S, or 4=W) and then typed in the coordinates. Our lead navigator carefully entered the first 9 waypoints before we departed, and I dutifully checked each. Another crew took our place after departure and, after we passed waypoint 4, the new navigator entered new waypoints 1, 2, and 3. Passing waypoint 8 we did another crew swap, and as I was getting settled in my seat the aircraft turned sharply to the right, directly toward the Kamchatka Peninsula. I clicked off the autopilot and returned to course as the navigator said, “Pilot, why did you turn right?” After a series of accusations followed by another series of “it wasn’t me” claims, we figured it out. The new waypoint 1 had been entered as N51°30.5 W163°38.7, a point along the Aleutian Islands, behind us and to the right. The second navigator confessed that he had spent most of his career punching in “W” for each longitude, and while his eyes read “E163°38.7” his fingers typed “W163°38.7” instead.
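The keypad convention above, and the kind of cross-check that would have caught the flipped longitude, can be sketched in a few lines of Python. This is a minimal illustration, not how the Carousel IV worked internally: the function names, the 600 nm maximum leg length, and the previous waypoint used in the example are hypothetical. It converts keypad entries to signed decimal degrees and flags a new waypoint that lies implausibly far from the one before it.

```python
import math

# Carousel IV keypad keys used for hemisphere letters, as described in the text.
HEMISPHERE_KEYS = {"2": "N", "6": "E", "8": "S", "4": "W"}

EARTH_RADIUS_NM = 3440.065  # mean Earth radius in nautical miles


def to_decimal(hemisphere, degrees, minutes):
    """Convert hemisphere letter, whole degrees, and decimal minutes to signed degrees."""
    value = degrees + minutes / 60.0
    return -value if hemisphere in ("S", "W") else value


def great_circle_nm(lat1, lon1, lat2, lon2):
    """Haversine great-circle distance, in nautical miles, between two points."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlam = math.radians(lon2 - lon1)
    a = (math.sin(dphi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(dlam / 2) ** 2)
    return 2 * EARTH_RADIUS_NM * math.asin(math.sqrt(a))


def leg_is_plausible(prev, new, max_leg_nm=600.0):
    """True if the new waypoint is within max_leg_nm (a hypothetical limit) of the previous one."""
    return great_circle_nm(prev[0], prev[1], new[0], new[1]) <= max_leg_nm


# Hypothetical previous waypoint; the new waypoint 1 from the story, keyed two ways.
previous = (to_decimal("N", 52, 0.0), to_decimal("E", 170, 0.0))
intended = (to_decimal(HEMISPHERE_KEYS["2"], 51, 30.5), to_decimal(HEMISPHERE_KEYS["6"], 163, 38.7))
typed = (to_decimal(HEMISPHERE_KEYS["2"], 51, 30.5), to_decimal(HEMISPHERE_KEYS["4"], 163, 38.7))

print(leg_is_plausible(previous, intended))  # True  (about 240 nm)
print(leg_is_plausible(previous, typed))     # False (about 975 nm)
```

A single wrong key moves the point roughly 700 nm, which is exactly the kind of error a distance check catches even when the typed digits look right.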

If you are flying something more modern than a Carousel IV INS, chances are you don’t have to manually type in coordinates. Even if you do, chances are you have a better keyboard that doesn’t require using a numeric keypad for letters. So, what can go wrong in your high-tech, “idiot-proof” airplane? Lots. Even in our digital world, the “garbage in, garbage out” problem can have dire implications. It remains our primary duty as pilots to aviate, navigate, and communicate.

1

Aviate

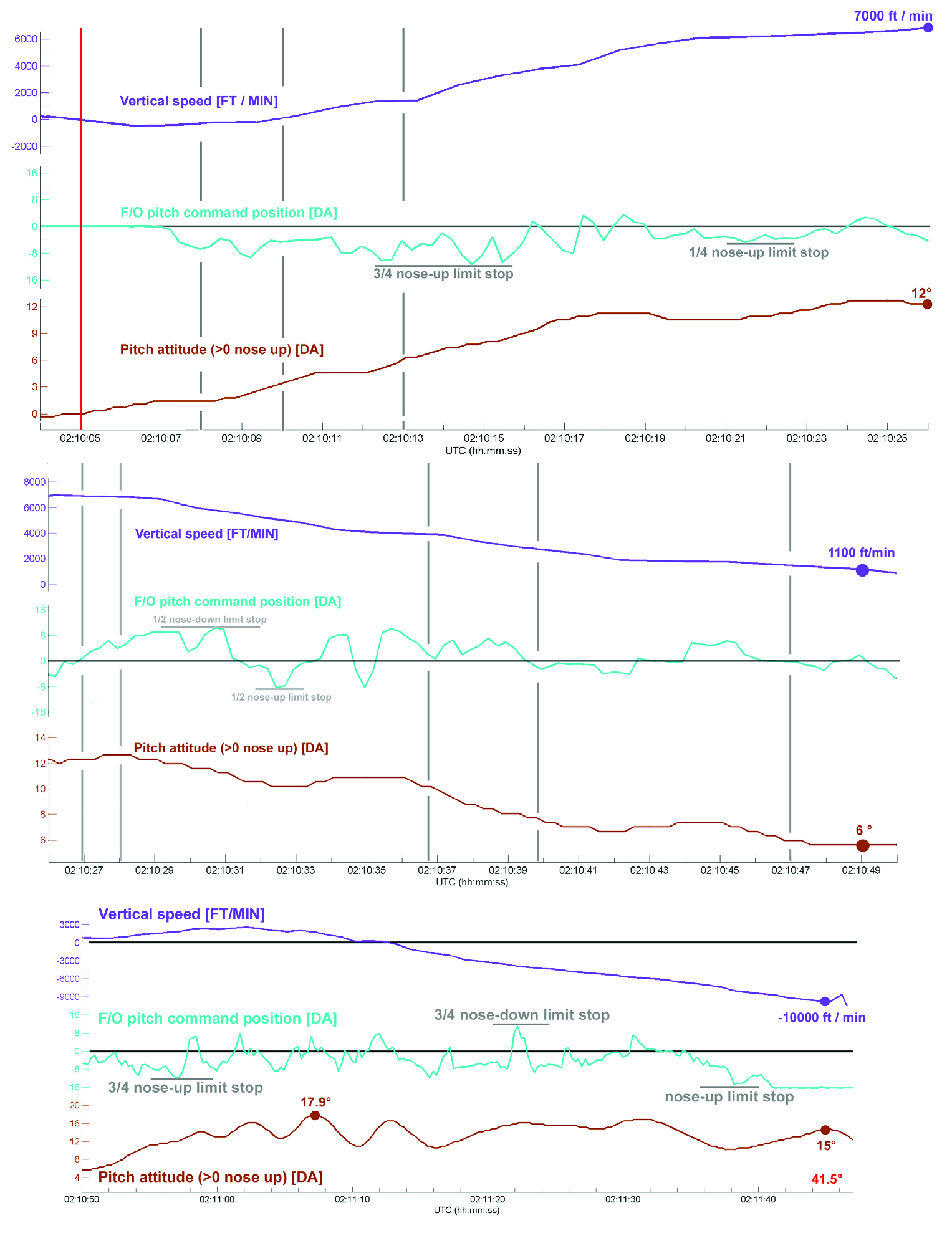

Air France 447 Pitch Data (BEA report, accident of 1 Jun 2009)

The tragic end to the June 1, 2009 flight of Air France 447 has become a standard case study for aircraft automation and Crew Resource Management (CRM) researchers. The captain was in back during an authorized rest break, and two first officers were left alone in the cockpit. At 35,000 feet, ice crystals temporarily blocked the pitot probes, and the resulting loss of reliable airspeed information caused the autopilot and autothrust to disengage. The pilots did not have any airspeed information for 20 seconds before at least one instrument recovered. The Pilot Flying (PF) pulled back on the stick and raised the pitch from around 2° to 12°, climbing 2,000 feet and stalling the aircraft. The Pilot Not Flying (PNF) took control but the PF took control back. In this Airbus, control “priority” is taken by pressing a button on the stick. An illuminated arrow in front of the pilot turns green to indicate which stick has priority, but in the heat of battle the PNF thought he had control when he didn’t. Four and a half minutes later the aircraft hit the ocean, killing all 228 passengers and crew. The last recorded vertical velocity was minus 10,912 feet/min.

Much has been made of the lack of side stick feedback which allowed one pilot to pull back while the other pushed forward, making it hard to discern who had control without proper callouts or a careful examination of the flight instruments. Human factors scientists have also made note of the startle factor and other forms of panic which handicapped the two inexperienced pilots. The first officer flying had less than eight years of total experience and around 3,000 hours of total time.

Very few of us who have graduated to high-altitude flight have spent much time hand flying our aircraft at the altitudes where the high- and low-speed performance margins narrow. After accidents like this one there are often cries for more hand flying, cries that ignore the regulatory requirements for using autopilots in Reduced Vertical Separation Minima (RVSM) airspace and the problems with having unbelted crew and passengers while an inexperienced pilot is maintaining the pitch manually. I’m not sure more hand flying would have cured the problem here. A quick look at the pilot’s control inputs reveals a fundamental lack of situational awareness.

More about this accident: Case Study: Air France 447

Pilots with considerably more experience flying large aircraft at high altitude will recognize the problem immediately. Before the airspeed information was lost, the autopilot was holding a pitch attitude of about 2.5° nose up. This is fairly standard for a large aircraft at high altitudes, but it isn’t universal. The first officer flying this Airbus pitched up to 12° nose up until the other first officer repeatedly told him to “go back down.” He then raised the nose as high as 17° nose up and never lowered it below 6° nose up. Even his minimum pitch attitude may have been too high to sustain level flight at their altitude.

We can allow the automation and human factors experts to investigate fixes to the computers and ways to improve the CRM. But there is a more basic fix to these kinds of flight data problems. We as pilots need to understand how to fly our aircraft as if the automation isn’t there. That means knowing what control inputs will create the desired performance.

Here is a quiz you should be able to pass with flying colors:

- What pitch and thrust setting is needed to sustain level flight at your normal cruise altitudes and speeds?

- What pitch is necessary to keep the aircraft climbing right after takeoff with all engines operating at takeoff thrust?

- What pitch is necessary to keep the aircraft climbing right after takeoff with an engine failed?

The answers were drilled into us heavy aircraft pilots many years ago, before the electrons took over our instruments. A blocked pitot tube or static port can make the airspeed indicator or altimeter behave nonsensically, so pilots were schooled to memorize known power and pitch settings. Most large aircraft will lose speed if the pitch is raised above 5° nose up at high altitudes. After takeoff with all engines operating, the answer is likely to be 10° nose up, or a bit more for some aircraft. With an engine failed, you might lose a few degrees. Of course, these numbers change with aircraft weight and environmental conditions, but you should have a number in mind.
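Having “a number in mind” can be expressed as a simple lookup plus a bit of arithmetic. The sketch below is hypothetical: the pitch bands only paraphrase the rules of thumb above and are not data for any aircraft. The vertical-speed estimate assumes wings-level flight where the flight path angle equals pitch minus angle of attack, and the example assumes a 2.5° angle of attack (the cruise pitch, since level flight means a zero flight path angle) and 470 knots true airspeed.

```python
import math

FEET_PER_NM = 6076.12

# Hypothetical pitch bands (degrees nose up) paraphrasing the rules of thumb in the text.
# They are NOT data for any aircraft; substitute numbers from your own AFM and experience.
PITCH_BANDS_DEG = {
    "high_altitude_cruise": (1.0, 5.0),   # most large aircraft lose speed above about 5 degrees
    "takeoff_all_engines": (10.0, 15.0),  # "10 degrees nose up, or a bit more"
    "takeoff_engine_out": (7.0, 12.0),    # "you might lose a few degrees"
}


def pitch_in_band(phase, pitch_deg):
    """True if the pitch attitude falls inside the remembered band for this phase of flight."""
    low, high = PITCH_BANDS_DEG[phase]
    return low <= pitch_deg <= high


def vertical_speed_fpm(tas_kt, pitch_deg, aoa_deg):
    """Instantaneous vertical speed, assuming wings level and flight path angle = pitch - AOA."""
    flight_path_rad = math.radians(pitch_deg - aoa_deg)
    return tas_kt * FEET_PER_NM / 60.0 * math.sin(flight_path_rad)


print(pitch_in_band("high_altitude_cruise", 2.5))   # True
print(pitch_in_band("high_altitude_cruise", 12.0))  # False
print(round(vertical_speed_fpm(470, 12.0, 2.5)))    # 7856
```

Under these assumptions, holding 12° instead of 2.5° momentarily asks for nearly 8,000 feet per minute of climb, far more than most large aircraft can sustain near their cruise ceiling, so the energy has to come out of airspeed.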

2

Navigate

I’ve had a profound distrust of VHF Omnidirectional Radio Range (VOR) navigation ever since 1993, when I found myself looking at a navigation signal that was somehow bent about 20 degrees to the south. I was flying a team of peacekeepers between two countries of the former Soviet Union aboard an Air Force Gulfstream III (C-20B), and it seemed there was no shortage of countries in the region that wanted our mission to fail. Our practice was to couple the navigation system to our ring laser gyro INSs displayed on the captain’s instruments, with any raw navigation signals displayed on the first officer’s side. I knew about “meaconing,” which the Department of Defense defines as an enemy action to intercept and rebroadcast radio signals in an attempt to confuse an adversary, but I didn’t expect it. We were confused, but our INS, terrain charts, and the clear skies kept us on course and away from those who wanted us to drift into enemy territory.

Of course, the accuracy of INS in those days was rather suspect. The Global Positioning System (GPS) changed everything, and it seemed that we ditched ground-based navigation aids almost overnight. So, nothing can go wrong now! Or can it?

The meaconing we worried about in a military context is only one part of the problem and GPS is not immune. The rest of the problem is known as “MIJI,” which stands for meaconing, intrusion, jamming, and interference. We are often warned of GPS outages through NOTAMs with instructions to avoid certain chunks of airspace. This can be more than a navigation problem, however. In 2016 operators of Embraer Phenom 300 business jets were warned of potential problems with flight stability controls resulting from GPS interference.

Some international operations courses touch lightly on the subject of dead reckoning in the event of a complete loss of GPS, but I would bet few pilots know how to do it and some may not even be familiar with the term. If you don’t feel confident you can safely find the European continent after losing GPS halfway across the North Atlantic, you should brush up on your dead reckoning skills.
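The arithmetic behind dead reckoning is small enough to sketch. The Python below solves the wind triangle for track and groundspeed, then advances the position using the flat-earth approximation: one nautical mile per minute of latitude, with longitude scaled by the cosine of the mid-latitude. The function names and the fix, heading, and wind in the example are hypothetical, and the approximation degrades over long legs and at high latitudes.

```python
import math


def wind_triangle(true_heading_deg, tas_kt, wind_from_deg, wind_kt):
    """Return (true track, groundspeed) from heading, true airspeed, and wind.

    Wind direction is where the wind blows FROM, as in aviation reports.
    """
    hdg = math.radians(true_heading_deg)
    wind_to = math.radians(wind_from_deg + 180.0)  # direction the air mass moves toward
    north = tas_kt * math.cos(hdg) + wind_kt * math.cos(wind_to)
    east = tas_kt * math.sin(hdg) + wind_kt * math.sin(wind_to)
    track = math.degrees(math.atan2(east, north)) % 360.0
    return track, math.hypot(north, east)


def dead_reckon(lat_deg, lon_deg, track_deg, groundspeed_kt, minutes):
    """Advance a position along a constant true track using the flat-earth approximation."""
    distance_nm = groundspeed_kt * minutes / 60.0
    dlat_deg = distance_nm * math.cos(math.radians(track_deg)) / 60.0
    mid_lat = math.radians(lat_deg + dlat_deg / 2.0)  # scale longitude at the mid-latitude
    dlon_deg = distance_nm * math.sin(math.radians(track_deg)) / (60.0 * math.cos(mid_lat))
    return lat_deg + dlat_deg, lon_deg + dlon_deg


# Hypothetical: last good fix N52 W030, heading 090 true at 480 KTAS, wind from 270 at 60 knots.
track, gs = wind_triangle(90.0, 480.0, 270.0, 60.0)
lat, lon = dead_reckon(52.0, -30.0, track, gs, 60.0)
print(round(track), round(gs))       # 90 540
print(round(lat, 2), round(lon, 2))  # 52.0 -15.38
```

The same two steps, repeated each time heading, airspeed, or the forecast wind changes, are what a pilot would do by hand with a plotter and a flight computer.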

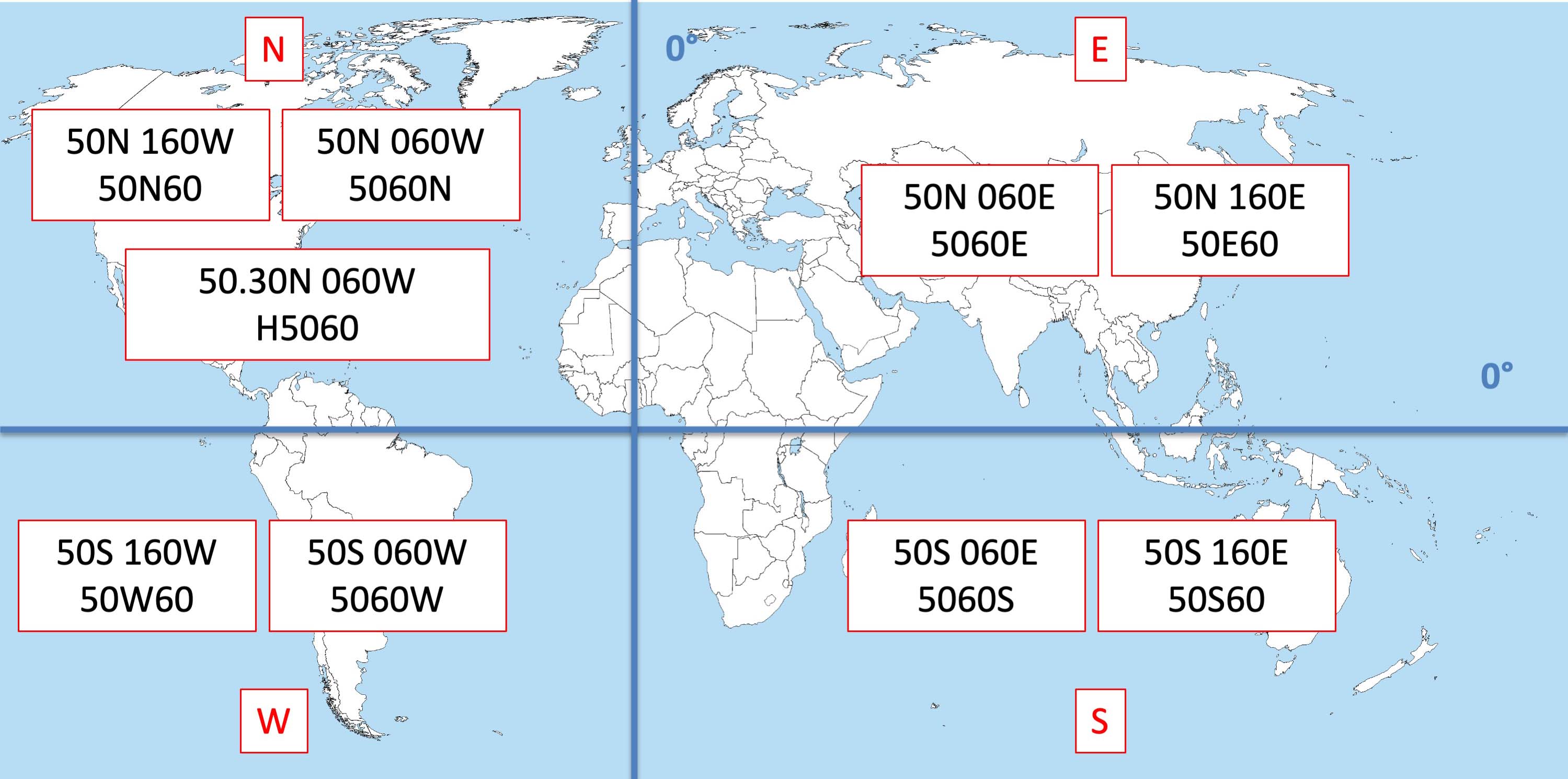

The problem can be harder to detect than a faulty, jammed, or missing GPS signal. It might be as simple as a Flight Management System (FMS) programming error that takes place outside your cockpit. When the North Atlantic Track System (NATS) adopted route spacing based on half degrees of latitude, the naming convention used by most FMSs changed. Part of the ARINC 424 specification uses four numbers and a letter to pinpoint an oceanic waypoint. The letter identifies the hemispheres of the coordinates, and its position tells you whether the longitude is above or below 100°. “5250N,” for example, means N 52°00’ W 50°00’. Moving the “N” changes everything, so that “52N50” means N 52°00’ W 150°00’, on the other side of the world. When the NATS adopted half degrees of latitude, the ARINC 424 specification added a new place to put the “N” to denote the new position: “N5250,” for example, meant N 52°30’ W 50°00’. The change was so subtle that pilots missed it, and navigation errors along the NATS became more frequent. The solution adopted added the letter “H” to the mix. In our example, “H5250” means N 52°30’ W 50°00’.
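The shorthand rules above can be captured in a small decoder, which makes it easy to see how one misplaced letter moves a waypoint 100° of longitude. This is a minimal sketch, not a complete ARINC 424 implementation. It assumes the standard ARINC 424 quadrant letters (N for north latitude and west longitude, E for north and east, S for south and east, W for south and west), and it handles the half-degree forms only for the north/west case in the example above; other half-degree letters are deliberately left out. The function name is hypothetical.

```python
import re

# ARINC 424 quadrant letters: (latitude hemisphere, longitude hemisphere).
QUADRANTS = {"N": ("N", "W"), "E": ("N", "E"), "S": ("S", "E"), "W": ("S", "W")}


def decode_oceanic(name):
    """Decode a five-character oceanic waypoint name into signed (lat, lon) degrees."""
    name = name.strip().upper()
    if re.fullmatch(r"\d{4}[NESW]", name):
        # 5250N: whole degrees; letter last means longitude below 100.
        lat, lon = int(name[0:2]), int(name[2:4])
        lat_h, lon_h = QUADRANTS[name[4]]
    elif re.fullmatch(r"\d{2}[NESW]\d{2}", name):
        # 52N50: letter in the middle means add 100 to the longitude.
        lat, lon = int(name[0:2]), 100 + int(name[3:5])
        lat_h, lon_h = QUADRANTS[name[2]]
    elif re.fullmatch(r"[HN]\d{4}", name):
        # H5250 (and the older N5250 form described in the text): latitude plus 30 minutes.
        # Only the north latitude / west longitude case is handled in this sketch.
        lat, lon = int(name[1:3]) + 0.5, int(name[3:5])
        lat_h, lon_h = "N", "W"
    else:
        raise ValueError(f"unrecognized oceanic waypoint name: {name!r}")
    return (-lat if lat_h == "S" else lat), (-lon if lon_h == "W" else lon)


for name in ("5250N", "52N50", "H5250"):
    print(name, decode_oceanic(name))
# 5250N (52, -50)
# 52N50 (52, -150)
# H5250 (52.5, -50)
```

Printing the decoded coordinates next to the shorthand, as a cross-check against the master document, is the software equivalent of reading each waypoint back in full.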

More about this: ARINC 424 Shorthand

Regardless of the source of confusion, we pilots need to arm ourselves against these kinds of data errors. As our airspace becomes more crowded, navigation accuracy is more than ever a matter of flight safety.

- Apply oceanic planning techniques to all flights by comparing waypoints, Estimated Time En Route, distances, and fuel figures between the computed flight plan and FMS.

- Check automatically produced takeoff and landing data (from the FMS, for example) with an independent source, such as AFM tables or a standalone application.

- Always keep the “big picture” in mind when looking at the route of flight shown on your navigation displays. For example, if a trip from Miami to New York includes a long leg heading west, you might have typed a waypoint incorrectly or it may have been coded incorrectly in the FMS database.

- Always keep the “close up” view in mind at each waypoint. Standard Instrument Departures, for example, often start in the wrong direction to blend in with other procedures. You should review those initial turns before takeoff.

- Let Air Traffic Control know if a GPS outage is creating flight anomalies you cannot otherwise explain. The 2016 issue with the Phenom 300 was a result of the aircraft’s yaw damper integration with GPS-reliant systems. Controllers may know of local military exercise activity and might be able to get your GPS signals back for you.

- Understand your aircraft procedures for dealing with unreliable or missing GPS signals. Your Minimum Equipment List may allow you to fly without GPS under some conditions, but what are the procedures?
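The first technique in the list, comparing the computed flight plan against what is loaded in the FMS, amounts to a leg-by-leg comparison with tolerances. The Python below is a hypothetical sketch: the `Leg` fields, the tolerance values, and the sample legs are assumptions for illustration, not any FMS or flight planning vendor’s data format.

```python
from dataclasses import dataclass


@dataclass
class Leg:
    waypoint: str       # identifier or ARINC 424 shorthand
    distance_nm: float  # leg distance
    ete_min: float      # cumulative estimated time en route
    fuel_lb: float      # planned fuel remaining at the waypoint


# Hypothetical tolerances; use your own operator's standards.
TOLERANCES = {"distance_nm": 2.0, "ete_min": 2.0, "fuel_lb": 300.0}


def compare_plans(flight_plan, fms):
    """List discrepancies between the computed flight plan and the FMS legs."""
    problems = []
    if len(flight_plan) != len(fms):
        problems.append(f"leg count: plan {len(flight_plan)}, FMS {len(fms)}")
    for i, (plan, box) in enumerate(zip(flight_plan, fms), start=1):
        if plan.waypoint != box.waypoint:
            problems.append(f"leg {i}: waypoint {plan.waypoint} vs {box.waypoint}")
            continue  # distances and times mean little if the waypoint itself differs
        for field, tolerance in TOLERANCES.items():
            planned, loaded = getattr(plan, field), getattr(box, field)
            if abs(planned - loaded) > tolerance:
                problems.append(f"leg {i} ({plan.waypoint}): {field} {planned} vs {loaded}")
    return problems


# Hypothetical legs: the second FMS entry has the letter in the wrong place.
plan = [Leg("5250N", 610, 82, 31000), Leg("5340N", 370, 130, 27800)]
fms = [Leg("5250N", 611, 82, 30900), Leg("53N40", 370, 130, 27800)]
for problem in compare_plans(plan, fms):
    print(problem)
# leg 2: waypoint 5340N vs 53N40
```

A real database error would usually show up as a distance or time mismatch rather than a different name, which is why the numeric comparisons matter as much as the waypoint check.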

3

Communicate

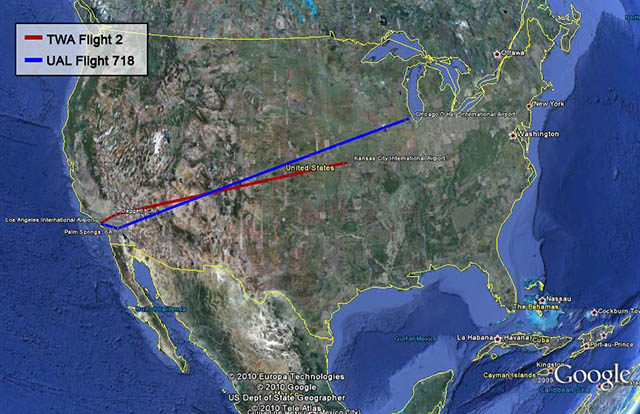

As late as the 1950s, en route air traffic control consisted of pilots guaranteeing their own separation by looking out the window with no communications between aircraft or with an air traffic controller. This was the “Big Sky Theory” in action: lots of sky, few airplanes. What can go wrong? On June 30, 1956, a United Airlines Douglas DC-7 and a Trans World Airlines Lockheed 1049A collided somewhere over Arizona. The Civil Aeronautics Board determined the official cause was that “the pilots did not see each other in time to avoid the collision.” These days we guarantee en route separation through communications and surveillance, and that often means Controller Pilot Data Link Communications (CPDLC).

More about this: Case Study: TWA 2 and United Airlines 718

If you started flying before CPDLC, you probably treated the new technology with suspicion. I remember the first time I received a clearance to climb via data link; I was tempted to confirm the instruction via HF radio: “Did you really clear me to climb?” But as the years have gone by, the novelty has worn off and we accept these kinds of clearances without a second thought. I should have kept up my skepticism back then, because the aircraft I was flying at the time did not have a latency monitor.

Latency, lā ten sē. noun. The state or quality of being latent (remaining in an inactive or hidden phase).

We were assured in training that if we were to receive data link instructions to set our latency timer we could cheerfully say “latency timer not available” and be on our way. It was an unnecessary piece of kit and we didn’t have to worry about it.

On September 12, 2017, an Alaska Airlines flight had a Communications Management Unit (CMU) problem that meant an ATC instruction to climb never made it to the crew. On the next flight, a reset of the CMU power corrected the issue and the pending message was delivered. The CMU did not recognize the message as old, so it was presented to the flight crew as a current clearance. The crew dutifully climbed 1,000 feet.

An ATC clearance like “Climb to and maintain FL 370” is obviously meant for “right now” and certainly not for “sometime tomorrow.” The Alaska Airlines flight had a problem with its Iridium system, which has since been corrected. But a latency timer would have checked that the clearance was delivered within the required time frame and would have rejected it.
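The idea behind a latency timer can be sketched as an age check on each uplink. This is a simplified, hypothetical model, not the FANS message set or any avionics vendor’s logic: the function name, the use of a single send timestamp, and the 40-second limit are assumptions chosen only to show why a stale clearance gets rejected.

```python
from datetime import datetime, timedelta, timezone


def accept_uplink(sent_at, received_at, max_delay_s):
    """Decide whether an uplinked clearance is fresh enough to present to the crew.

    max_delay_s=None models an aircraft with no latency monitor: every message passes.
    """
    if max_delay_s is None:
        return True
    delay = received_at - sent_at
    # Reject stale messages; also reject timestamps in the future (a clock problem).
    return timedelta(0) <= delay <= timedelta(seconds=max_delay_s)


sent = datetime(2021, 1, 1, 12, 0, tzinfo=timezone.utc)  # hypothetical send time
next_flight = sent + timedelta(hours=20)                 # delivered after a CMU reset
print(accept_uplink(sent, sent + timedelta(seconds=8), 40))  # True
print(accept_uplink(sent, next_flight, 40))                  # False
print(accept_uplink(sent, next_flight, None))                # True: no monitor, stale clearance shown
```

The last line is the case in the story: without a latency check, a day-old climb clearance looks exactly like a fresh one.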

Having controllers issue instructions to the wrong aircraft or a pilot accepting a clearance meant for someone else is nothing new. But the speed of datalink communications opens entirely new opportunities for this kind of confusion. The fix, once again, relies on a large dose of common sense:

- Ensure datalink systems are programmed with the correct Flight Identifications and that these agree with those in the filed flight plan.

- Understand the flight manual limitations that degrade datalink systems, and do not use datalink systems that no longer meet their certification requirements.

- Apply a “sanity check” to each air traffic clearance and query controllers when a clearance doesn’t make sense. The controller may indeed want you to descend early during your transoceanic crossing, but that would be unusual.

4

Envelope protection

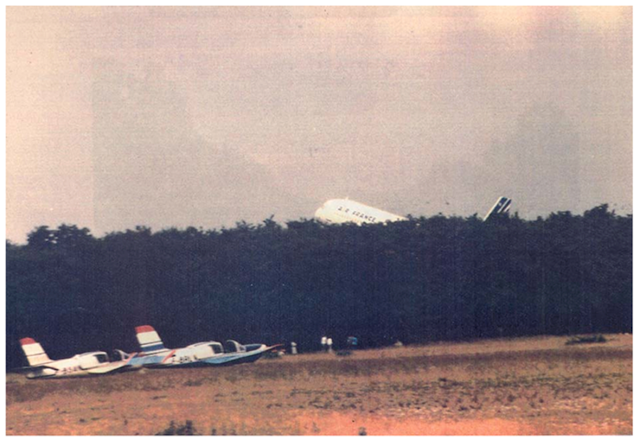

Air France 296, moments before impact (BEA, Annex 1)

I once wrote in my maintenance log, “Cockpit went nuts,” because it was the most apt description of what had happened. We were speeding down the runway at around 110 knots, just 15 knots short of V1, when every bell and whistle we had started to complain. The Crew Alerting System was so filled with messages it looked like someone had hit a test button we didn’t have. It turned out one of the Angle of Attack (AOA) vanes had momentarily become misaligned with the wind but managed to sort itself out by the time we rotated. It became a known issue, and the manufacturer eventually replaced all of the faulty AOA vanes in the fleet. In that aircraft, AOA was a tool for the pilots, but it couldn’t fly the aircraft other than to push the nose forward if we stalled. That was on an aircraft with a hydraulic flight control system where the computers could not overrule the pilots. Things are different in fly-by-wire aircraft.

On June 26, 1988, one of the earliest Airbus A320s crashed in front of an airshow crowd at Mulhouse-Habsheim Airport (LFGB), France. The crew misjudged their low pass altitude, and the aircraft decided it was landing when the pilots intended a go around. The aircraft didn’t allow the engines to spool up in time to avoid the crash, which killed 3 of the 136 persons on board. I remember thinking at the time that it was nuts that this fly-by-wire aircraft didn’t obey the pilot’s command to increase thrust.

More about this: Case Study: Air France 296

Three decades later I got typed in a fly-by-wire aircraft for the first time, a Gulfstream GVII-G500. I thought the G500’s flight control system was far superior to that on the Airbus, or that of any other aircraft. And that still might be true, but the G500 is not above overruling the pilot’s intentions.

In 2020 a GVII-G500 suffered a landing at Teterboro Airport (KTEB), New Jersey, that was hard enough to damage part of the structure. It was a gusty crosswind day, and the pilots did not add and hold the recommended “half the steady and all the gust” additive to their approach speed. (The recommended additives have since been made mandatory on the GVII.) The pilots compounded the problem with rapid, full-deflection control inputs. The airplane flight manual, even back then, included a caution against this: “Rapid and large alternating control inputs, especially in combination with large changes in pitch, roll or yaw, and full control inputs in more than one axis at the same time, should be avoided as they may result in structural failures at any speed, including below the maneuvering speed.”

That is good advice for any aircraft, fly-by-wire or not. But it reminds those of us flying data-driven aircraft that we not only need to ensure the data input is accurate and that the output is handled correctly, but also that we understand the process from input to output. In each of our aviate, navigate, and communicate tasks, it is important to compare the data-driven process to how we would carry out the same tasks manually. If the black boxes are doing something you wouldn’t do, you need to get actively involved. Having the aircraft turn ninety degrees off course on its own is an obvious problem that needs fixing. But many of our data problems are subtle or even hidden. You cannot assume the computers can read your intent; they must be constantly monitored. The “garbage in” problem is more than a problem of you typing in the wrong data; the garbage can come from aircraft sensors, other aircraft computers, sources outside the aircraft, even the original design. Even when you are the Pilot Flying (PF), you should always be monitoring the aircraft systems as if you were the Pilot Monitoring (PM).

References

(Source material)

Bureau d'Enquêtes et d'Analyses (BEA), Année 1990 No 28, Accident on June 26, 1988, at Mulhouse-Habsheim (68) to the Airbus A320, registered F-GFKC.

Bureau d'Enquêtes et d'Analyses (BEA) pour la sécurité de l’aviation civile, Final Report on the Accident on 1st June 2009 to the Airbus A330-203 registered F-GZCP operated by Air France flight AF 447 Rio de Janeiro - Paris, published July 2012.